The 5 Pillars of Linux: Understanding Their Roles

Apr 11, 2025, 12:07 AM

The five pillars of the Linux system are: 1. the kernel, 2. system libraries, 3. the shell, 4. the file system, and 5. system tools. The kernel manages hardware resources and provides basic services; system libraries provide precompiled functions for applications; the shell is the interface through which users interact with the system; the file system organizes and stores data; and system tools are used to manage and maintain the system.

Introduction

The charm of Linux lies in its flexibility and power, both of which rest on its core components. This article discusses the five pillars of a Linux system: the kernel, system libraries, the shell, the file system, and system tools. Understanding the role of each pillar gives you a clearer picture of how Linux works and helps you use its capabilities more effectively. Whether you are new to Linux or a veteran, this article aims to offer some new insights and practical tips.

Review of basic knowledge

Linux is a Unix-like operating system whose kernel was first released by Linus Torvalds in 1991. Its design philosophy emphasizes open source, freedom and flexibility. A Linux system consists of multiple components, each with its own role.

The kernel is the core of the Linux system; it manages hardware resources and provides basic services. System libraries are collections of precompiled functions that programs call instead of reimplementing common functionality. The shell is the interface through which users interact with the operating system, the file system organizes and stores data, and system tools are utilities for managing and maintaining the system.

Core concept or function analysis

Kernel: Linux's core

The kernel is the core part of the Linux system and is responsible for managing the system's hardware resources, such as CPU, memory, hard disk, etc. It also provides basic services such as process scheduling, memory management, file system management, etc. The kernel is designed to be efficient, stable and scalable.
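Much of the kernel's bookkeeping (its version, the processes it schedules, its load averages) is visible from user space through the /proc pseudo-filesystem. The sketch below reads a few of those values; it assumes a Linux system with /proc mounted, and the function names are hypothetical helpers, not standard commands.

```shell
#!/bin/bash
# Hypothetical helpers that read values the kernel publishes under /proc.

# Kernel release string, the same value `uname -r` prints.
kernel_release() {
    cat /proc/sys/kernel/osrelease
}

# Number of processes the kernel currently tracks: one numeric directory per PID.
process_count() {
    local count=0 entry
    for entry in /proc/[0-9]*; do
        [ -d "$entry" ] && count=$((count + 1))
    done
    echo "$count"
}

# The 1-, 5- and 15-minute load averages maintained by the kernel.
load_average() {
    cut -d ' ' -f 1-3 /proc/loadavg
}

echo "Kernel:    $(kernel_release)"
echo "Processes: $(process_count)"
echo "Load avg:  $(load_average)"
```

Tools such as `uname`, `ps` and `uptime` present the same kernel data in friendlier form.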

```c
// Kernel module example
#include <linux/module.h>
#include <linux/kernel.h>

// Called when the module is loaded (e.g. with insmod)
int init_module(void)
{
    printk(KERN_INFO "Hello, world - this is a kernel module\n");
    return 0;
}

// Called when the module is unloaded (e.g. with rmmod)
void cleanup_module(void)
{
    printk(KERN_INFO "Goodbye, world - this was a kernel module\n");
}

MODULE_LICENSE("GPL");
MODULE_AUTHOR("Your Name");
MODULE_DESCRIPTION("A simple kernel module");
MODULE_VERSION("1.0");
```

Kernel modules are an important kernel feature: they let developers load and unload code at runtime without restarting the system. The code above shows a simple kernel module that writes "Hello, world" to the kernel log when it is loaded and "Goodbye, world" when it is unloaded.
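Once a module like this has been built against the running kernel's headers, `insmod` loads it and `rmmod` unloads it (both require root), and its `printk` messages can be read with `dmesg`. The kernel lists loaded modules in /proc/modules, the table that `lsmod` formats. A minimal sketch of checking that table; `module_loaded` is a hypothetical helper, and the commented cycle assumes the module was built as hello.ko.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a kernel module is currently loaded.
# Each line of /proc/modules starts with the module's name.
module_loaded() {
    local name=$1
    # Hyphens in module file names appear as underscores in the registered name.
    name=${name//-/_}
    awk -v mod="$name" '$1 == mod { found = 1 } END { exit !found }' /proc/modules
}

# Typical cycle (as root, assuming the module was built as hello.ko):
#   insmod hello.ko          # runs the module's init function
#   module_loaded hello && echo "hello is loaded"
#   rmmod hello              # runs the module's cleanup function
#   dmesg | tail -n 2        # shows the two printk() messages
```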

System Library: The cornerstone of an application

A system library is a collection of precompiled functions that programs can call, such as the C standard library (glibc on most Linux distributions). Libraries provide common functionality such as file operations, network communication, and graphical interfaces. Using them greatly simplifies application development and improves code reuse and maintainability.

```c
// Example of using the C standard library
#include <stdio.h>
#include <stdlib.h>

int main(void) {
    // Create (or truncate) example.txt for writing
    FILE *file = fopen("example.txt", "w");
    if (file == NULL) {
        perror("Error opening file");
        return EXIT_FAILURE;
    }

    fprintf(file, "Hello, world!\n");
    fclose(file);
    return EXIT_SUCCESS;
}
```

The code above uses the standard C library headers stdio.h and stdlib.h: it creates a file and writes to it with the fopen, fprintf and fclose functions, and reports its result with the EXIT_SUCCESS and EXIT_FAILURE status codes.
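Most Linux programs are dynamically linked: the dynamic loader maps the shared libraries they need when they start. `ldd` lists those libraries. A minimal sketch; `shared_libs` is a hypothetical helper name, and it assumes `ldd` is installed (it ships with both glibc and musl).

```shell
#!/bin/bash
# Hypothetical helper: list the shared libraries a command on $PATH links against.
shared_libs() {
    local path
    path=$(command -v "$1") || { echo "not found: $1" >&2; return 1; }
    # ldd prints lines such as "libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x...)";
    # the first field is the library name (or the loader's path).
    ldd "$path" | awk '{ print $1 }'
}

shared_libs bash
```

Avoid running `ldd` on untrusted binaries: some implementations obtain the list by executing the program with special environment settings.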

Shell: The bridge between users and systems

The shell is the interface between the user and the operating system: it reads the user's commands and has the operating system execute them. Besides running individual commands, the shell can run scripts that automate complex tasks.

```shell
#!/bin/bash

# Simple shell script example
echo "Hello, world!"

for i in {1..5}
do
    echo "Iteration $i"
done
```

The script above shows basic shell usage: printing text and using a loop. The flexibility and power of shell scripts make them an important tool for Linux system administration and automation.
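When you enter a command, bash first checks whether the name is an alias, a shell keyword, a function, or a builtin that it runs itself; otherwise it searches the directories in $PATH and asks the kernel to run the program it finds. Bash's `type -t` reports which case applies. A small sketch; `command_kind` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: report how bash would run a name.
# `type -t` prints alias, keyword, function, builtin or file, and nothing if unknown.
command_kind() {
    local kind
    kind=$(type -t "$1")
    echo "${kind:-not found}"
}

for name in cd echo ls no_such_command_xyz; do
    printf '%-20s %s\n' "$name" "$(command_kind "$name")"
done
```

In an interactive shell, `ls` is often an alias, so the same call may report `alias` there rather than `file`.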

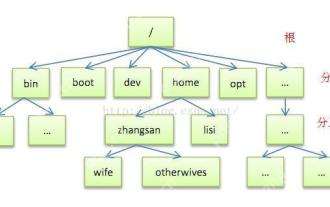

File system: data organizer

The file system organizes and stores data; it defines how files and directories are structured and accessed. Linux supports a variety of file systems, such as ext4, XFS, and Btrfs, each with its own features and use cases.

```shell
# View file system information
df -h
# Create a new directory
mkdir new_directory
# Copy a file
cp source_file destination_file
# Delete a file
rm unwanted_file
```

The above commands show some basic operations of the file system, including viewing file system information, creating directories, copying and deleting files. The design and management of file systems are crucial to system performance and data security.
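Because Linux can mount several file systems side by side, it is often useful to know which one holds a given path. The sketch below assumes GNU coreutils, whose `stat -f` reports file system status and whose `%T` format prints the type in readable form; `fs_type` is a hypothetical helper. `df -T` shows the same information for every mounted file system.

```shell
#!/bin/bash
# Hypothetical helper: print the type of the file system containing a path
# (relies on GNU coreutils stat).
fs_type() {
    stat -f -c '%T' "$1"
}

for path in / /tmp /proc; do
    printf '%-6s %s\n' "$path" "$(fs_type "$path")"
done
```

Note that ext2, ext3 and ext4 share the same magic number, so GNU `stat` reports all three as `ext2/ext3`.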

System tools: a powerful tool for system management

System tools are a set of utilities used to manage and maintain Linux systems. They include system monitoring, backup, recovery, network management and other functions. The use of system tools can greatly simplify system management tasks and improve system stability and security.

```shell
# Check system resource usage
top
# View the system log
journalctl
# Back up a directory
tar -czvf backup.tar.gz /path/to/directory
# Restore the backup
tar -xzvf backup.tar.gz -C /path/to/restore
```

The commands above show some commonly used system tools: `top` for monitoring system resources, `journalctl` for viewing the system log on systemd-based systems, and `tar` for backing up and restoring files. Note that GNU tar strips the leading `/` from member names, so this example restores the files under /path/to/restore/path/to/directory. Which tools to use depends on the specific needs and environment.
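A backup is only useful if it restores correctly. The sketch below uses hypothetical helper names: it archives a directory relative to its parent (so member names carry no absolute path), restores the archive into a scratch directory, and compares the two trees with `diff -r`.

```shell
#!/bin/bash
# Hypothetical helpers for a verified tar backup.

# Archive SRC into ARCHIVE, storing paths relative to SRC's parent directory.
backup_dir() {
    local src=$1 archive=$2
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")"
}

# Extract ARCHIVE into DEST, creating DEST if needed.
restore_dir() {
    local archive=$1 dest=$2
    mkdir -p "$dest"
    tar -xzf "$archive" -C "$dest"
}

# Back up SRC, restore it into a temporary directory, and compare the trees.
verify_backup() {
    local src=$1 work status
    work=$(mktemp -d) || return 1
    backup_dir "$src" "$work/backup.tar.gz" &&
        restore_dir "$work/backup.tar.gz" "$work/restore" &&
        diff -r "$src" "$work/restore/$(basename "$src")"
    status=$?
    rm -rf "$work"
    return "$status"
}
```

For example, `verify_backup /path/to/directory` returns 0 only if the restored copy matches the original.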

Example of usage

Basic usage

In daily use, we often need to use these pillars to complete various tasks. For example, use the shell to execute commands, use the file system to manage data, and use system tools to monitor system status.

```shell
# Use the shell to run a command
ls -l
# Use the file system to manage data
mv old_file new_file
# Use a system tool to monitor system status
free -h
```

The above commands show the basic usage of these pillars, including listing files, moving files, and viewing memory usage.
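`free` takes its figures from /proc/meminfo. The sketch below reads two of those fields directly; it assumes a kernel recent enough to report MemAvailable (added in Linux 3.14), and `meminfo_kib` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: print one /proc/meminfo field in KiB.
# The kernel writes these lines as "Name:   12345 kB".
meminfo_kib() {
    awk -v key="$1:" '$1 == key { print $2; found = 1 } END { exit !found }' /proc/meminfo
}

total=$(meminfo_kib MemTotal)
available=$(meminfo_kib MemAvailable)
echo "Total:     $((total / 1024)) MiB"
echo "Available: $((available / 1024)) MiB ($((100 * available / total))% of total)"
```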

Advanced Usage

In more complex scenarios, we can combine these pillars to achieve more advanced functionality. For example, use Shell scripts to automate system management tasks and use system tools to optimize performance.

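```shell
#!/bin/bash

# Example of a shell script that automates system maintenance tasks
echo "Starting system maintenance..."

# Clean up temporary files last modified more than 7 days ago
find /tmp -type f -mtime +7 -delete

# Report file systems that are more than 80% full
df -h | awk 'NR > 1 && $5 + 0 > 80 {print $0}'

# Back up important data
tar -czvf /backup/important_data.tar.gz /path/to/important_data

echo "System maintenance completed."
```

The script above shows how a shell script can automate system maintenance: cleaning up temporary files, checking disk usage, and backing up important data. Two details matter here: `-mtime +7` matches files whose age, rounded down to whole days, is greater than 7 (a bare `-mtime 7` would match only files whose age rounds down to exactly 7 days), and `$5 + 0` converts a value such as `85%` to the number 85, so awk compares numbers rather than strings.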
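Because `-delete` cannot be undone, it is worth previewing what a cleanup rule matches before running it. The sketch below uses a hypothetical helper, `stale_files`, that lists instead of deleting; the demo relies on GNU `touch -d` accepting a relative date such as "10 days ago".

```shell
#!/bin/bash
# Hypothetical helper: list regular files under DIR whose age, in whole days,
# is greater than DAYS. find truncates ages to whole days, so +7 only matches
# files at least 8 full days old.
stale_files() {
    local dir=$1 days=$2
    find "$dir" -type f -mtime "+$days" -print
}

# Demo in a scratch directory: only the back-dated file is listed.
demo=$(mktemp -d)
touch "$demo/fresh.log"
touch -d '10 days ago' "$demo/old.log"
stale_files "$demo" 7
rm -rf "$demo"
```

Once the listing looks right, the same expression with `-delete` in place of `-print` performs the cleanup.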

Common Errors and Debugging Tips

There are some common mistakes and problems that may occur when using these pillars. For example, syntax errors in shell scripts, file system permission issues, system tool configuration errors, etc.

- Syntax errors in shell scripts: use `bash -n script.sh` to check a script for syntax errors without running it.
- File system permission issues: use the `chmod` and `chown` commands to change the permissions and ownership of files and directories.
- System tool configuration errors: read the tool's documentation carefully and make sure its configuration file is correct.
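`bash -n` parses a script without executing any of it, so it catches syntax errors but not runtime problems such as a missing file; for those, running the script with `bash -x` prints each command as it executes. A minimal sketch of checking several scripts at once; `check_scripts` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: syntax-check each script given as an argument without running it.
# Returns non-zero if any script fails to parse; bash -n prints the details on stderr.
check_scripts() {
    local script status=0
    for script in "$@"; do
        if bash -n "$script"; then
            echo "OK:    $script"
        else
            echo "ERROR: $script"
            status=1
        fi
    done
    return "$status"
}
```

For example, `check_scripts ./*.sh` checks every script in the current directory.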

Performance optimization and best practices

In practical applications, we need to continuously optimize the performance and efficiency of the system. Here are some recommendations for optimization and best practices:

- Kernel tuning: adjust kernel parameters with the `sysctl` command according to the system's specific needs.
- Choice of system libraries: choose libraries that fit the application's needs and avoid unnecessary dependencies.
- Shell script optimization: use the `time` command to measure a script's execution time, then improve its logic and efficiency.
- File system optimization: choose a suitable file system and maintain it regularly, for example by checking it with `fsck` while it is unmounted.
- System tool optimization: select and configure system tools according to the system's needs, and monitor and maintain the system regularly.
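`sysctl` parameter names map onto files under /proc/sys, with dots replaced by slashes, so `sysctl vm.swappiness` reads /proc/sys/vm/swappiness. The sketch below reads parameters that way; `kernel_param` is a hypothetical helper. Changing a value requires root (`sysctl -w name=value`), and the change lasts only until reboot unless it is also written to a file under /etc/sysctl.d/.

```shell
#!/bin/bash
# Hypothetical helper: read a kernel parameter by its sysctl name (e.g. "vm.swappiness")
# by translating it to the matching path under /proc/sys.
kernel_param() {
    local path="/proc/sys/${1//.//}"
    [ -r "$path" ] || { echo "unknown or unreadable parameter: $1" >&2; return 1; }
    cat "$path"
}

kernel_param kernel.ostype     # prints "Linux"
kernel_param vm.swappiness

# To measure a script before and after an optimization (hypothetical script name):
#   time ./maintenance.sh
```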

By understanding and mastering the five pillars of a Linux system, we can make better use of its powerful capabilities. In practice, using these pillars flexibly can greatly improve a system's efficiency and stability. Hopefully this article offers useful insights and practical tips to help you find your way around the Linux world.

The above is the detailed content of The 5 Pillars of Linux: Understanding Their Roles. For more information, please follow other related articles on the PHP Chinese website!
