CoCa: Contrastive Captioners are Image-Text Foundation Models Visually Explained

Mar 10, 2025, 11:17 AM

This DataCamp community tutorial, edited for clarity and accuracy, explores image-text foundation models, focusing on the Contrastive Captioner (CoCa) model. CoCa combines contrastive and generative learning objectives, integrating the strengths of models like CLIP and SimVLM into a single architecture.

Foundation Models: A Deep Dive

Foundation models, pre-trained on massive datasets, are adaptable for various downstream tasks. While NLP has seen a surge in foundation models (GPT, BERT), vision and vision-language models are still evolving. Research has explored three primary approaches: single-encoder models, image-text dual-encoders with contrastive loss, and encoder-decoder models with generative objectives. Each approach has limitations.

Key Terms:

- Foundation Models: Pre-trained models adaptable for diverse applications.

- Contrastive Loss: A loss function that pulls the representations of matching input pairs together and pushes those of non-matching pairs apart.

- Cross-Modal Interaction: Interaction between different data types (e.g., image and text).

- Encoder-Decoder Architecture: A neural network in which an encoder maps the input to an intermediate representation and a decoder generates the output from it.

- Zero-Shot Learning: Making predictions for classes that were not seen during training.

- CLIP: A contrastive language-image pre-training model.

- SimVLM: Simple Visual Language Model, an encoder-decoder model trained with a generative (prefix language modeling) objective.

Model Comparisons:

- Single-Encoder Models: Excel at vision tasks, but because they are trained on human-annotated class labels rather than free-form natural language, they transfer poorly to vision-language tasks.

- Image-Text Dual-Encoder Models (CLIP, ALIGN): Excellent for zero-shot classification and image retrieval, but limited in tasks requiring fused image-text representations (e.g., Visual Question Answering).

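- Generative Models (SimVLM): Use cross-modal interaction for joint image-text representation, suitable for VQA and image captioning, but they do not produce a text-only representation aligned with image embeddings, which makes them less suited to retrieval-style tasks.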
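To make the dual-encoder case concrete, here is a minimal sketch of zero-shot classification by embedding similarity. It assumes the image and the class prompts have already been embedded by some pair of encoders; the function name `zero_shot_classify` and the toy vectors are hypothetical and are not taken from CLIP, ALIGN, or CoCa.

```python
import numpy as np

def zero_shot_classify(image_emb, class_text_embs, class_names):
    """Pick the class whose text embedding is closest to the image embedding.

    image_emb: (d,) image embedding (assumed to come from an image encoder).
    class_text_embs: (num_classes, d) embeddings of one text prompt per class.
    """
    # L2-normalize so that the dot product equals cosine similarity.
    image_emb = image_emb / np.linalg.norm(image_emb)
    class_text_embs = class_text_embs / np.linalg.norm(
        class_text_embs, axis=1, keepdims=True
    )
    scores = class_text_embs @ image_emb  # one cosine similarity per class
    return class_names[int(np.argmax(scores))], scores

# Toy 2-D embeddings standing in for real encoder outputs.
names = ["cat", "dog"]
label, scores = zero_shot_classify(
    np.array([0.9, 0.1]),
    np.array([[1.0, 0.0], [0.0, 1.0]]),
    names,
)
```

No classifier is trained on the target classes here: adding a class only requires embedding one more prompt, which is why dual-encoder models suit zero-shot classification and retrieval.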

CoCa: Bridging the Gap

CoCa aims to unify the strengths of contrastive and generative approaches. It uses a contrastive loss to align image and text representations and a generative objective (captioning loss) to create a joint representation.
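In the notation of the CoCa paper, the two objectives are combined as a weighted sum. The weights $\lambda_{\text{Con}}$ and $\lambda_{\text{Cap}}$ are hyperparameters; no values are given in this article, so none are assumed here.

```latex
\mathcal{L}_{\text{CoCa}}
  = \lambda_{\text{Con}} \cdot \mathcal{L}_{\text{Con}}
  + \lambda_{\text{Cap}} \cdot \mathcal{L}_{\text{Cap}}
```

Here $\mathcal{L}_{\text{Con}}$ is the contrastive loss and $\mathcal{L}_{\text{Cap}}$ is the captioning loss, so a single forward and backward pass trains both behaviours.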

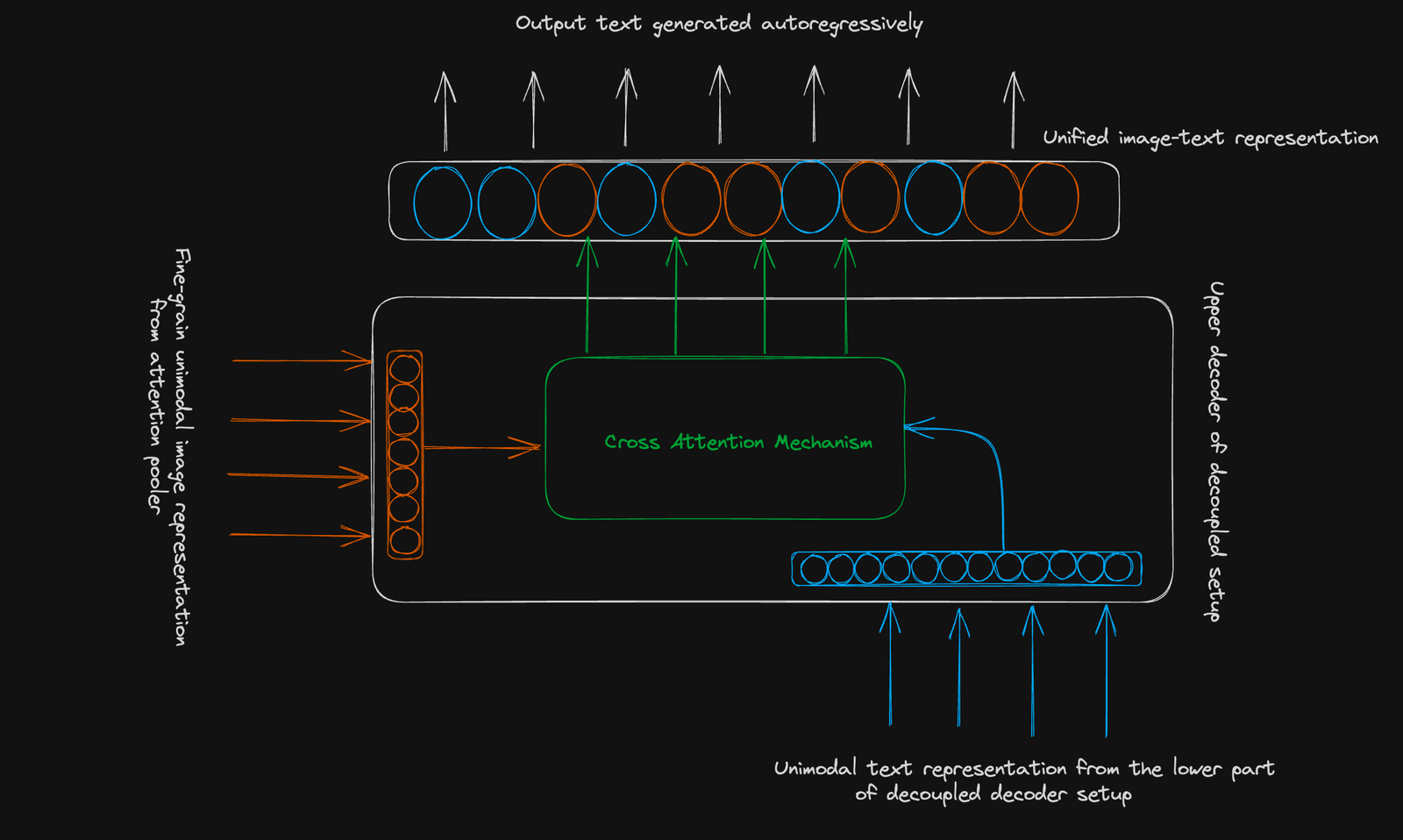

CoCa Architecture:

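CoCa uses a standard encoder-decoder structure: an image encoder and a text decoder. Its innovation is to split the decoder into two halves:

- Lower Decoder (unimodal): Omits cross-attention and generates a text-only representation for contrastive learning, taken from a [CLS] token appended to the end of the input text.

- Upper Decoder (multimodal): Cross-attends to the image encoder outputs and generates a joint image-text representation for generative learning. Both decoders use causal masking.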
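The decoupled decoder can be sketched structurally as follows. This is a toy illustration, not the paper's implementation: it assumes, as the CoCa paper describes, that the lower layers use only causal self-attention while the upper layers also cross-attend to the image tokens. It has no learned weights, feed-forward blocks, or layer normalization, and the names `decoupled_decoder`, `n_unimodal`, and `n_multimodal` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(queries, keys, values, mask=None):
    """Single-head scaled dot-product attention without learned projections."""
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # block disallowed positions
    return softmax(scores) @ values

def causal_mask(length):
    # Position t may attend only to positions <= t.
    return np.tril(np.ones((length, length), dtype=bool))

def decoupled_decoder(text_tokens, image_tokens, n_unimodal=2, n_multimodal=2):
    """text_tokens: (T, d) with the [CLS] token last; image_tokens: (M, d)."""
    mask = causal_mask(len(text_tokens))
    h = text_tokens
    # Lower (unimodal) layers: causal self-attention only, no image input.
    for _ in range(n_unimodal):
        h = h + attention(h, h, h, mask)
    cls_embedding = h[-1]  # text-only embedding for the contrastive loss
    # Upper (multimodal) layers: causal self-attention plus cross-attention.
    for _ in range(n_multimodal):
        h = h + attention(h, h, h, mask)
        h = h + attention(h, image_tokens, image_tokens)
    return cls_embedding, h  # h feeds the captioning head
```

Because the [CLS] token sits at the end of the sequence, causal masking still lets it attend to every text token, while the lower layers never see the image, so the text embedding stays unimodal.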

Contrastive Objective: Learns to cluster related image-text pairs and separate unrelated ones in a shared vector space. A single pooled image embedding is used.
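A minimal NumPy sketch of a symmetric contrastive loss over a batch of paired embeddings follows. It assumes row i of `image_emb` and row i of `text_emb` come from the same image-text pair; the fixed `temperature` value is an illustrative assumption (in practice it is usually a learned parameter), and `contrastive_loss` is a hypothetical name.

```python
import numpy as np

def log_softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """image_emb, text_emb: (N, d) arrays; row i of each is a matching pair."""
    # L2-normalize so that similarities are cosine similarities.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # (N, N) similarity matrix
    idx = np.arange(len(logits))
    # Image-to-text: each row should put its probability mass on the diagonal.
    image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text-to-image: the same requirement, taken over columns.
    text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return image_to_text + text_to_image
```

The diagonal of the similarity matrix holds the matching pairs, so minimizing this loss raises their similarity relative to every other pairing in the batch.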

Generative Objective: Uses a fine-grained image representation (a sequence of 256 image tokens rather than a single pooled vector) and cross-modal attention to predict text autoregressively.
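Autoregressive prediction is typically trained with a token-level cross-entropy loss. The sketch below assumes the decoder has already produced one row of vocabulary logits per caption position, each computed from the image and the preceding tokens only; `captioning_loss` is a hypothetical name and the toy shapes are illustrative.

```python
import numpy as np

def captioning_loss(logits, target_ids):
    """Negative log-likelihood of the caption, summed over tokens.

    logits: (T, V) scores; row t is predicted from the image and tokens < t.
    target_ids: (T,) integer ids of the ground-truth caption tokens.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    positions = np.arange(len(target_ids))
    return -log_probs[positions, target_ids].sum()

# Toy example: a 3-token caption over a 5-word vocabulary.
targets = np.array([0, 1, 2])
uninformed = captioning_loss(np.zeros((3, 5)), targets)
confident = captioning_loss(10.0 * np.eye(5)[targets], targets)
```

Logits that favour the correct token at each position give a lower loss than uniform logits, which is the signal that pushes the multimodal decoder to use the image when generating text.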

Conclusion:

CoCa represents a significant advancement in image-text foundation models. Its combined approach enhances performance in various tasks, offering a versatile tool for downstream applications. To further your understanding of advanced deep learning concepts, consider DataCamp's Advanced Deep Learning with Keras course.

Further Reading:

- Learning Transferable Visual Models From Natural Language Supervision

- Image-Text Pre-training with Contrastive Captioners
