Technology peripherals

Technology peripherals

AI

AI

Alibaba 7B multi-modal document understanding large model wins new SOTA

Alibaba 7B multi-modal document understanding large model wins new SOTA

Alibaba 7B multi-modal document understanding large model wins new SOTA

Apr 02, 2024 am 11:31 AMMultimodal document understandingAbility New SOTA!

The Alibaba mPLUG team released the latest open source workmPLUG-DocOwl 1.5, which proposed a solution for the four major challenges of high-resolution image text recognition, general document structure understanding, instruction compliance, and external knowledge introduction. series of solutions.

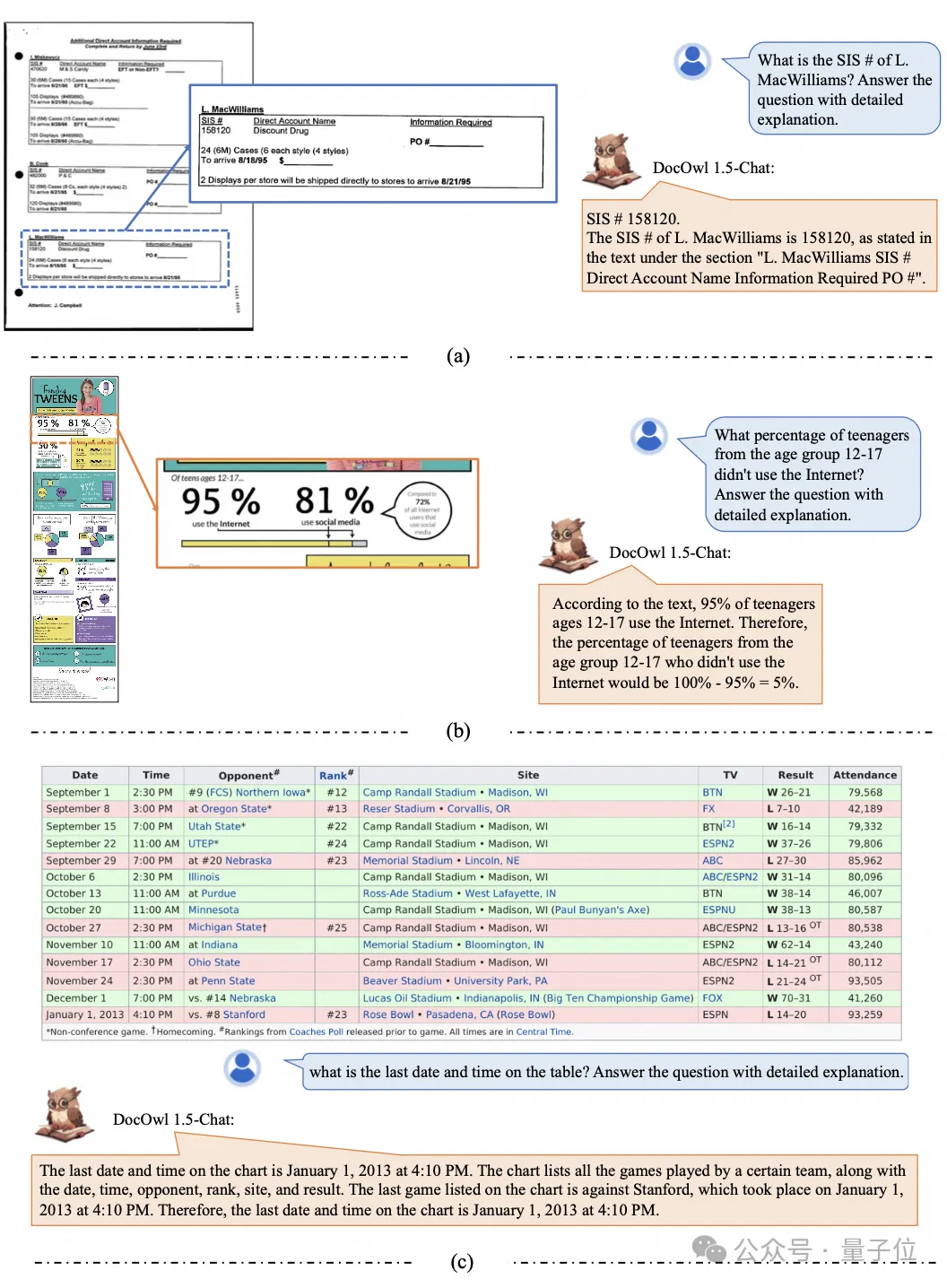

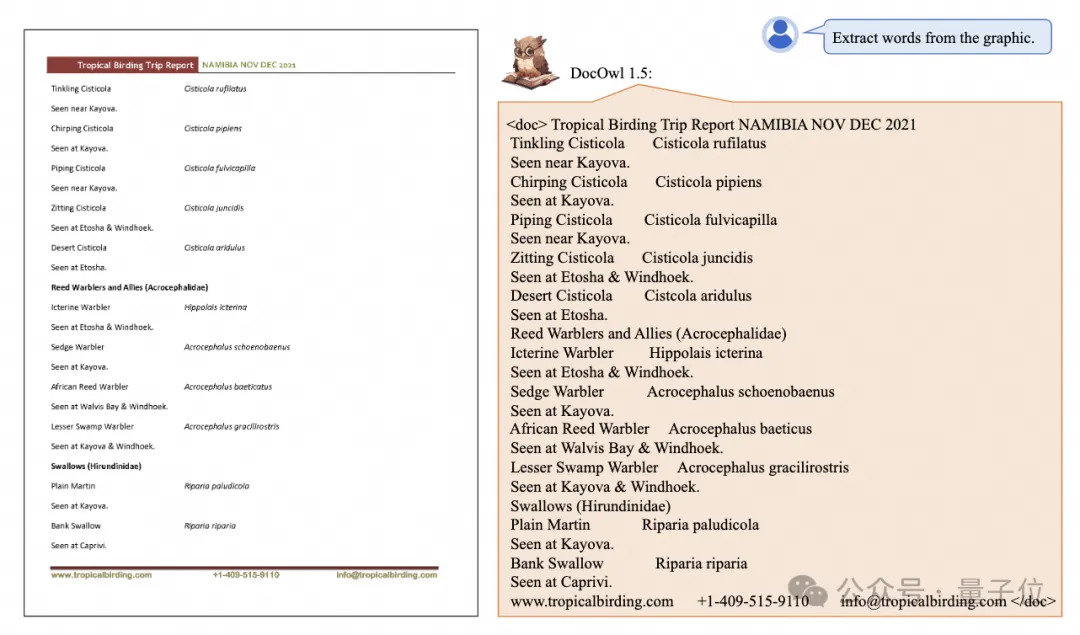

Without further ado, let’s look at the effects first.

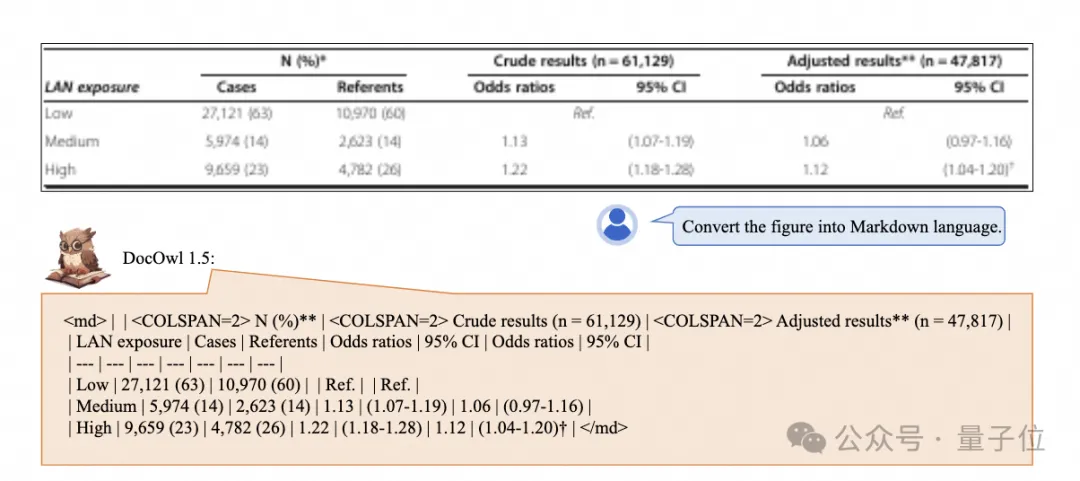

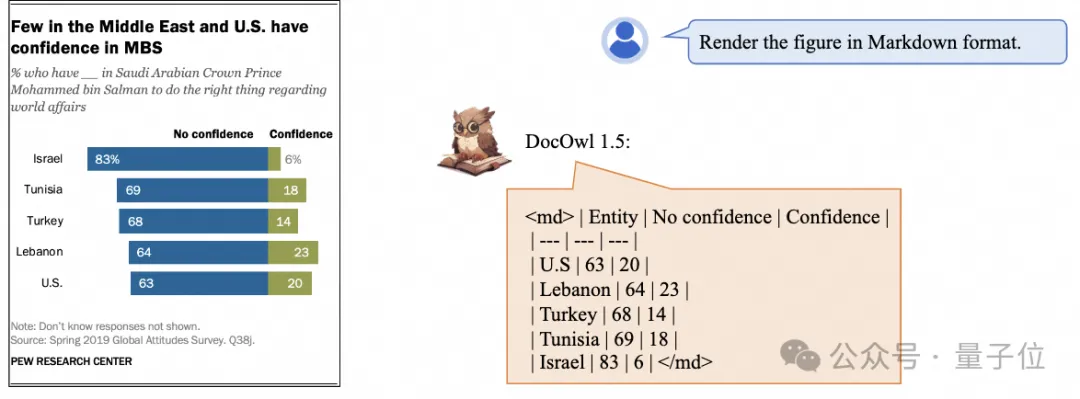

One-click recognition and conversion of charts with complex structures into Markdown format:

Charts of different styles are available:

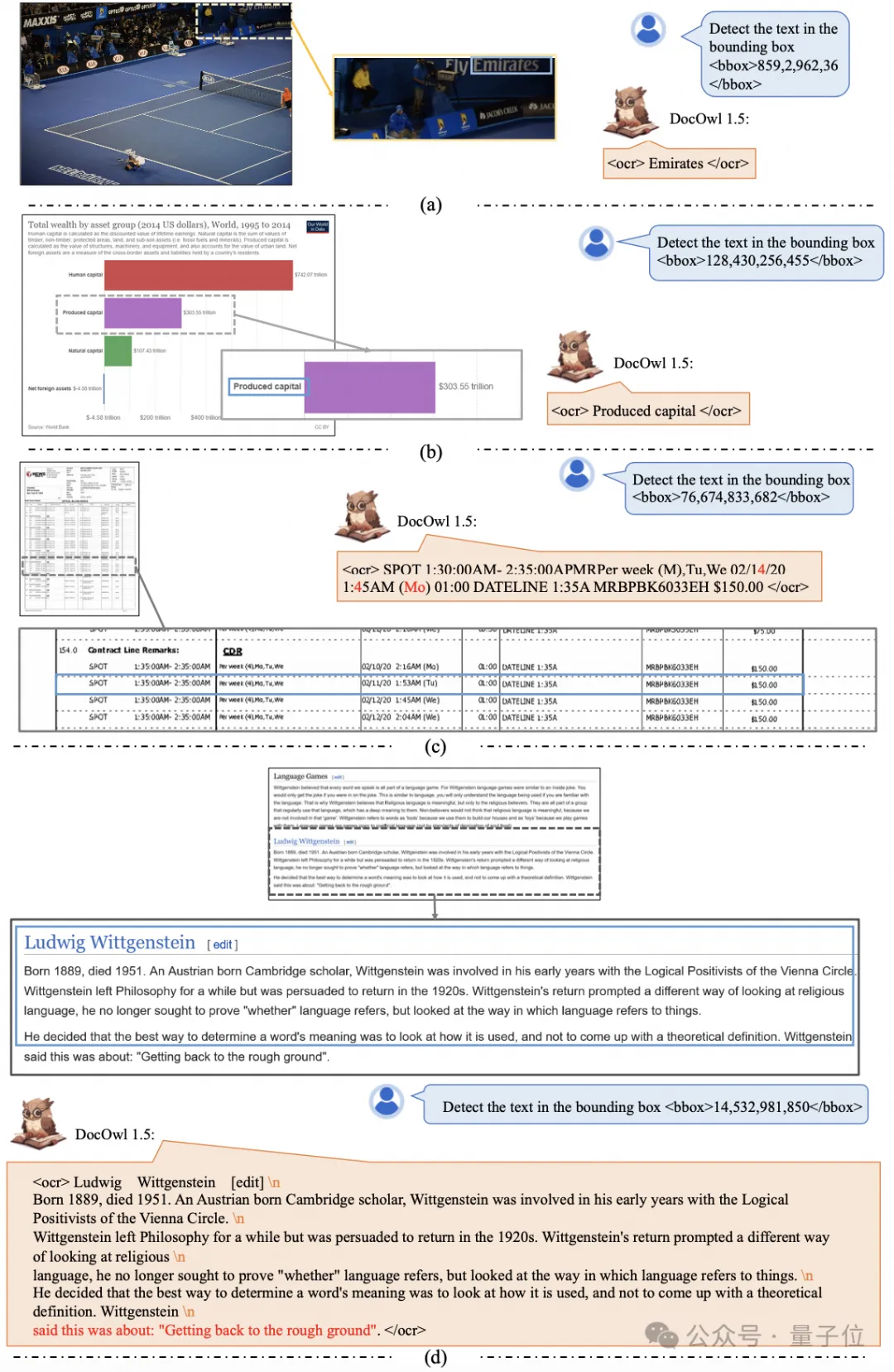

More detailed text recognition and positioning can also be easily accomplished:

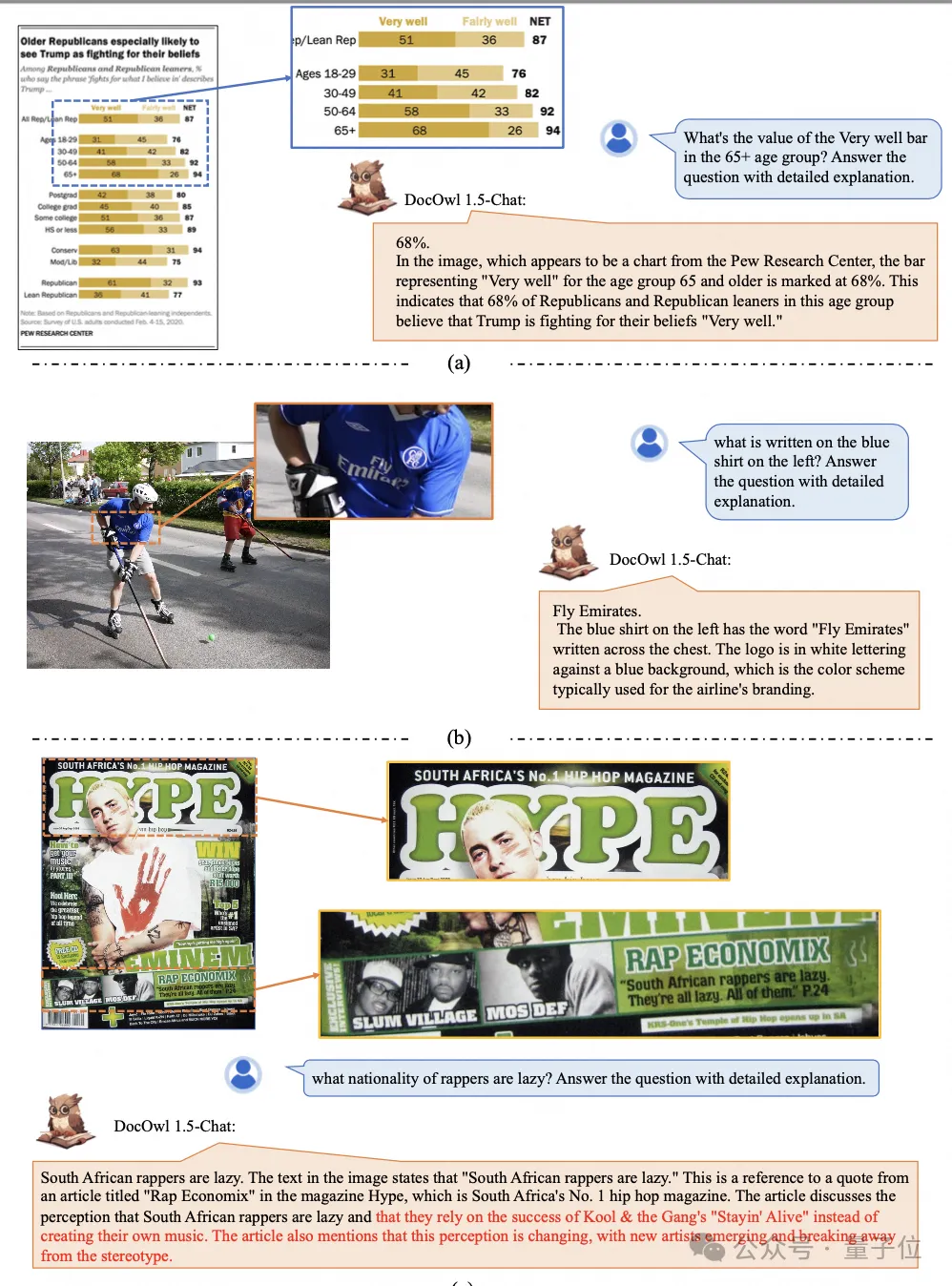

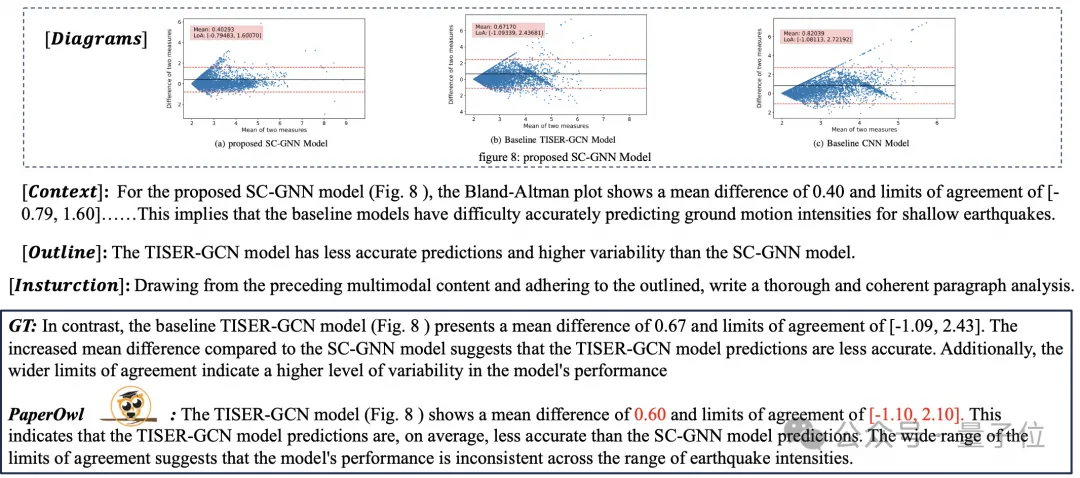

It can also provide detailed explanations for document understanding:

You must know that "document understanding" is currently an important scenario for the implementation of large language models. There are many products on the market to assist document reading, and some mainly use OCR systems for text recognition and cooperate with LLM for text recognition. Comprehension can achieve good document understanding ability.

However, due to the diverse categories of document pictures, rich text, and complex layout, it is difficult to achieve universal understanding of pictures with complex structures such as charts, infographics, and web pages.

The currently popular multi-modal large models QwenVL-Max, Gemini, Claude3, and GPT4V all have strong document image understanding capabilities. However, open source models have made slow progress in this direction.

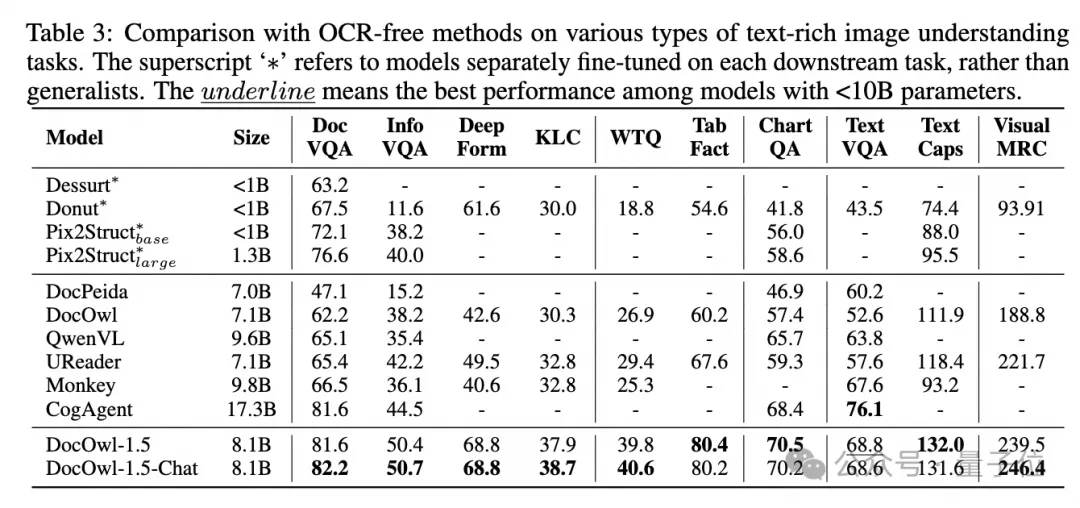

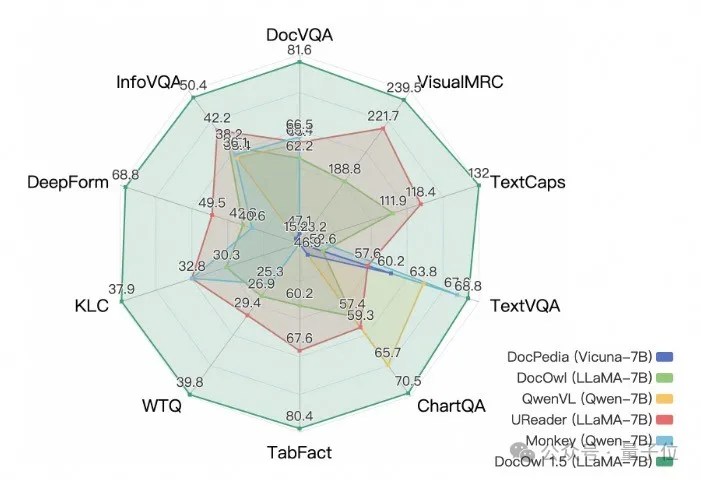

Alibaba’s new research mPLUG-DocOwl 1.5 won SOTA on 10 document understanding benchmarks, improved by more than 10 points on 5 data sets, and surpassed Wisdom’s 17.3B CogAgent on some data sets. In DocVQA Achieving an effect of 82.2.

In addition to the ability to answer simple questions on the baseline, DocOwl 1.5-Chat can also have the ability to fine-tune the data with a small amount of "detailed explanation" (reasoning) The ability to explain in detail in the field of multimodal documents has great application potential.

Alibaba’s mPLUG team began to invest in research on multi-modal document understanding in July 2023, and successively released mPLUG-DocOwl, UReader, mPLUG-PaperOwl, mPLUG-DocOwl 1.5, and open sourced a series of large document understanding models. and training data.

This article starts from the latest work mPLUG-DocOwl 1.5, analyzing the key challenges and effective solutions in the field of "multimodal document understanding".

Challenge 1: High-resolution image text recognition

Different from ordinary images, document images are characterized by diverse shapes and sizes, which can include A4-sized document images and short and wide tables. Pictures, long and narrow screenshots of mobile phone web pages, casually shot scene pictures, etc., the resolution distribution is very wide.

When mainstream multi-modal large models encode images, they often directly scale the image size. For example, mPLUG-Owl2 and QwenVL scale to 448x448, and LLaVA 1.5 scales to 336x336.

Simply scaling the document image will cause the text in the image to be blurred and deformed, making it unreadable.

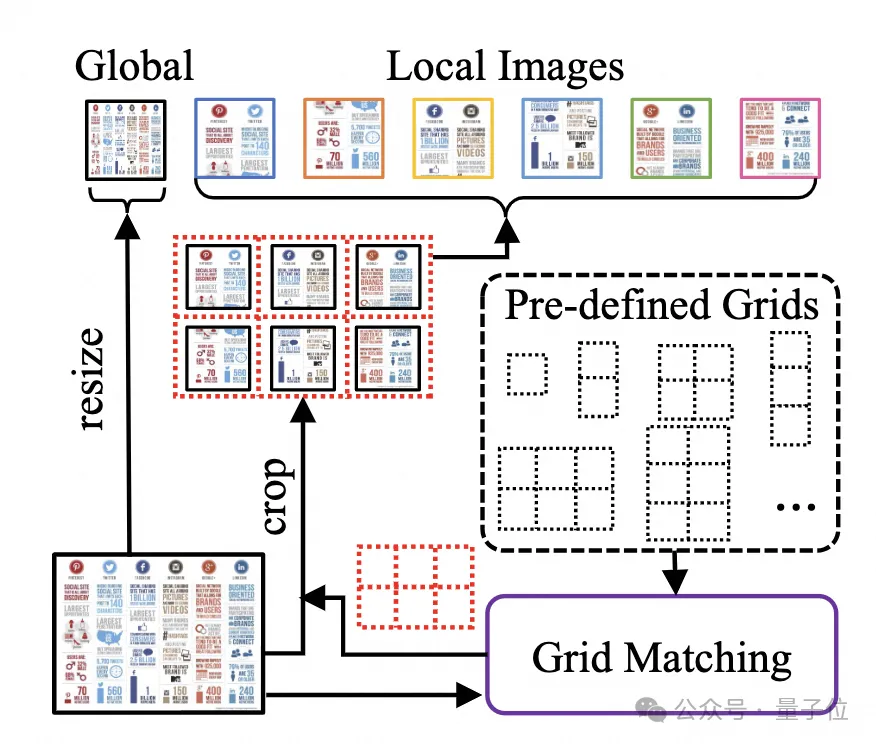

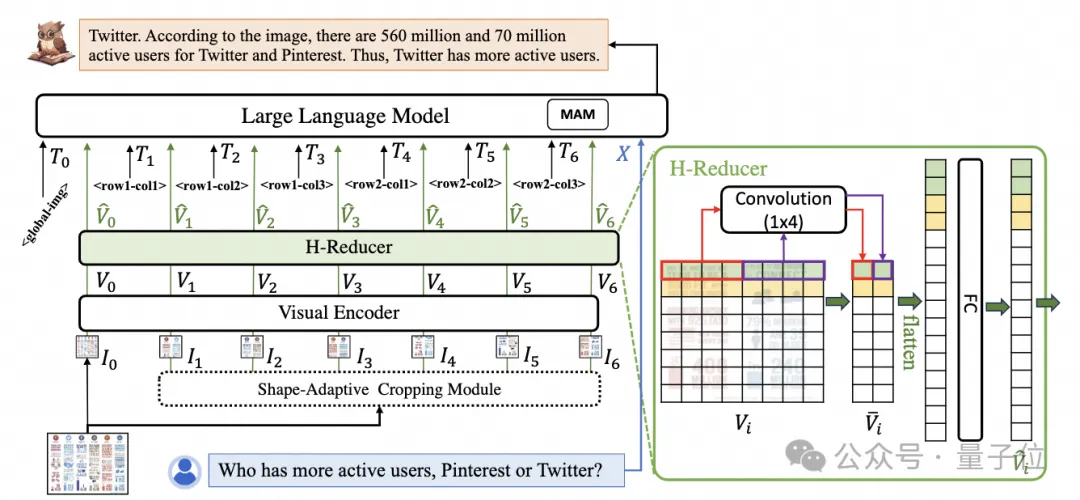

In order to process document images, mPLUG-DocOwl 1.5 continues the cutting method of its pre-process UReader. The model structure is shown in Figure 1:

△Figure 1: DocOwl 1.5 model structure diagram

UReader first proposed based on the existing multi-modal large model, adapting the shape cutting module without parameters(Shape -adaptive Cropping Module) Obtain a series of sub-pictures, each sub-picture is encoded through a low-resolution encoder, and finally the direct semantics of the sub-pictures are associated through a language model.

This graph cutting strategy can make maximum use of the ability of existing general-purpose visual encoders (such as CLIP ViT-14/L) for document understanding, greatly reducing the need to retrain high-resolution Rate visual encoder cost. The shape-adapted cutting module is shown in Figure 2:

△Figure 2: Shape-adaptive cutting module.

Challenge 2: General document structure understanding

For document understanding that does not rely on OCR systems, text recognition is a basic ability. It is very important to achieve semantic understanding and structural understanding of document content, such as To understand the content of the table, you need to understand the correspondence between table headers and rows and columns; to understand charts, you need to understand diverse structures such as line graphs, bar graphs, and pie charts; to understand contracts, you need to understand diverse key-value pairs such as date signatures.

mPLUG-DocOwl 1.5 focuses on solving general document and other structural understanding capabilities. Through the optimization of the model structure and the enhancement of training tasks, it has achieved significantly stronger general document understanding capabilities.

In terms of structure, as shown in Figure 1, mPLUG-DocOwl 1.5 abandons the visual language connection module of Abstractor in mPLUG-Owl/mPLUG-Owl2, adopts H based on "convolutional fully connected layer" -Reducer performs feature aggregation and feature alignment.

Compared with Abstractor based on learnable queries, H-Reducer retains the relative positional relationship between visual features and better transfers document structure information to the language model.

Compared with MLP that retains the length of the visual sequence, H-Reducer greatly reduces the number of visual features through convolution, allowing LLM to understand high-resolution document images more efficiently.

Considering that the text in most document images is arranged horizontally first, and the text semantics in the horizontal direction are coherent, the convolution shape and step size of 1x4 are used in H-Reducer. In the paper, the author proved through sufficient comparative experiments the superiority of H-Reducer in structural understanding and that 1x4 is a more general aggregate shape.

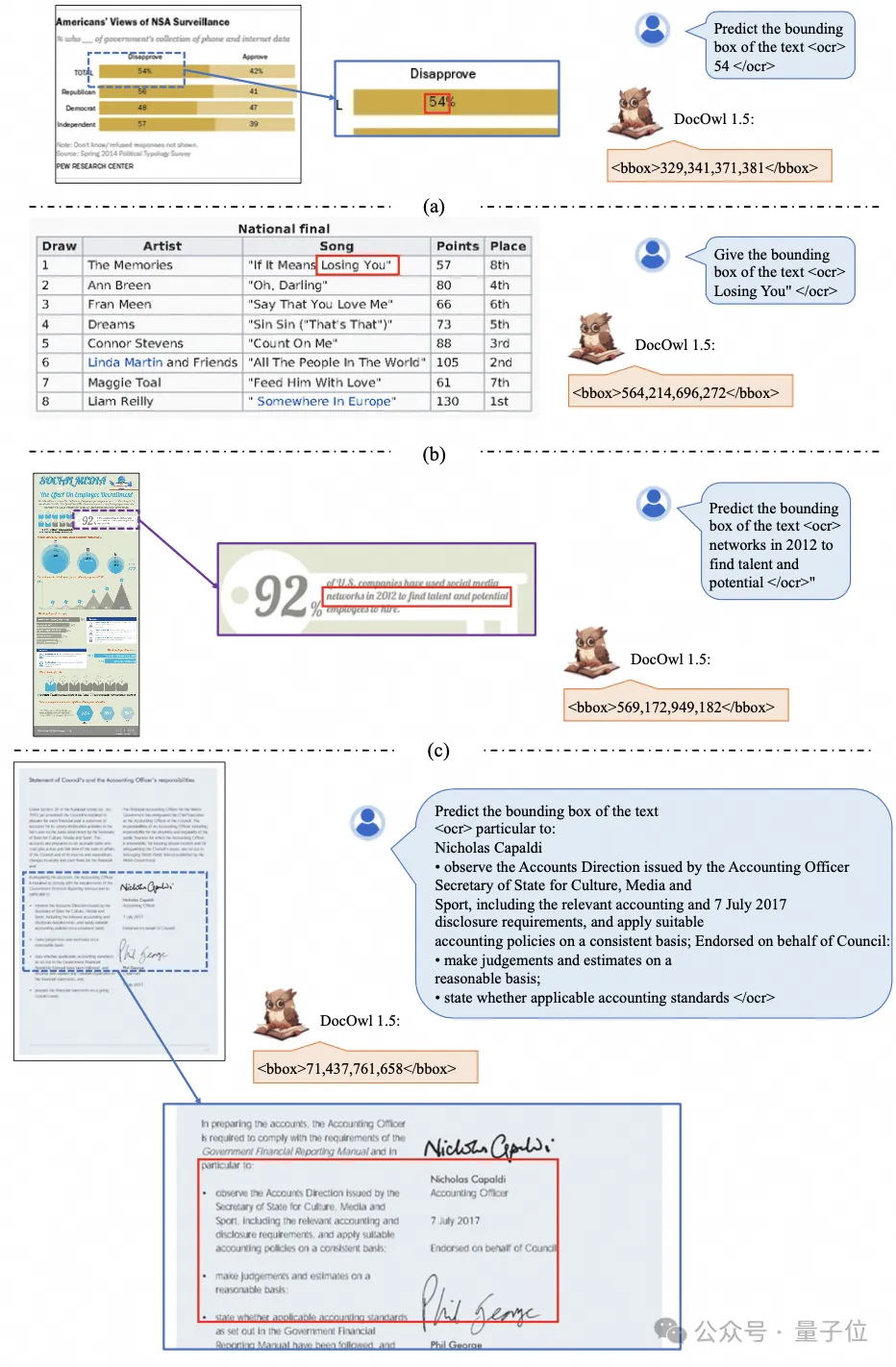

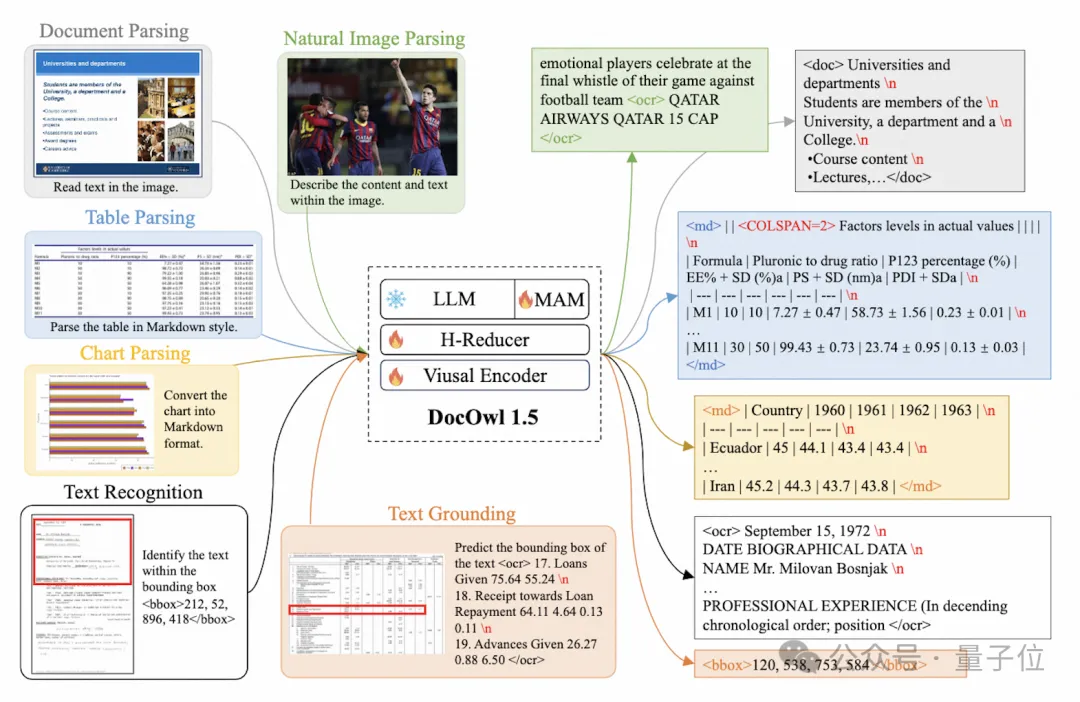

In terms of training tasks, mPLUG-DocOwl 1.5 designs a unified structure learning (Unified Structure Learning) task for all types of pictures, as shown in Figure 3.

△Figure 3: Unified Structure Learning

Unified Structure Learning includes not only global picture text analysis, but also multi-granularity text recognition and positioning .

In the global image text parsing task, for document images and web page images, spaces and line breaks can be used to most commonly represent the structure of text; for tables, the author introduces multi-line representation based on Markdown syntax. Special characters in multiple columns take into account the simplicity and versatility of table representation; for charts, considering that charts are visual presentations of tabular data, the author also uses tables in the form of Markdown as the analysis target of charts; for natural diagrams, semantic description and Scene text is equally important, so the form of picture description spliced ??with scene text is used as the analysis target.

In the "Text Recognition and Positioning" task, in order to better fit the understanding of document images, the author designed text recognition and positioning at four granularities of word, phrase, line, and block. The bounding box uses discretized integers. Numerical representation, range 0-999.

In order to support unified structure learning, the author constructed a comprehensive training setDocStruct4M, covering different types of images such as documents/web pages, tables, charts, and natural images.

After unified structure learning, DocOwl 1.5 has the ability to structurally analyze and text position document images in multiple fields.

△Figure 4: Structured text analysis

As shown in Figure 4 and Figure 5:

△Figure 5: Multi-granularity text recognition and positioning

Challenge 3: Instruction Following

"Instruction Following"(Instruction Following) Requires the model to be based on basic document understanding capabilities and perform different tasks according to user instructions, such as information extraction, question and answer, picture description, etc.

Continuing the practice of mPLUG-DocOwl, DocOwl 1.5 unifies multiple downstream tasks into the form of command question and answer. After unified structure learning, a document is obtained through multi-task joint training Domain general model(generalist).

In addition, in order to make the model have the ability to explain in detail, mPLUG-DocOwl has tried to introduce plain text instructions to fine-tune data for joint training, which has certain effects but is not ideal.

In DocOwl 1.5, the author built a small amount of detailed explanation data through GPT3.5 and GPT4V based on the problems of downstream tasks (DocReason25K) .

By combining document downstream tasks and DocReason25K for training, DocOwl 1.5-Chat can achieve better results on the benchmark:

△Figure 6: Document Understanding Benchmark The evaluation

can also give a detailed explanation:

△Figure 7: Detailed explanation of document understanding

Challenge 4: Introduction of external knowledge

Due to the richness of information in document pictures, additional knowledge is often required for understanding, such as professional terms and their meanings in special fields, etc.

In order to study how to introduce external knowledge for better document understanding, the mPLUG team started in the paper field and proposed mPLUG-PaperOwl, building a high-quality paper chart analysis data set M-Paper, involving 447k high-definition papers. chart.

This data provides context for the charts in the paper as an external source of knowledge, and designs "key points" (outline) as control signals for chart analysis to help the model better grasp User intent.

Based on UReader, the author fine-tuned mPLUG-PaperOwl on M-Paper, which demonstrated preliminary paper chart analysis capabilities, as shown in Figure 8.

△Figure 8: Paper chart analysis

mPLUG-PaperOwl is currently only an initial attempt to introduce external knowledge into document understanding, and still faces domain limitations, Problems such as a single source of knowledge need to be further resolved.

In general, this article starts from the recently released 7B most powerful multi-modal document understanding large model mPLUG-DocOwl 1.5, and summarizes the four key points for multi-modal document understanding without relying on OCR. Key challenges ("High-resolution image text recognition", "Universal document structure understanding", "Instruction following", "External knowledge introduction") and the solutions provided by Alibaba mPLUG team.

Although mPLUG-DocOwl 1.5 has greatly improved the document understanding performance of the open source model, there is still a large gap between it and the closed source large model and real needs, in terms of text recognition, mathematical calculation, general purpose, etc. in natural scenes. There is still room for improvement.

The mPLUG team will further optimize the performance of DocOwl and open source it. Everyone is welcome to continue to pay attention and have friendly discussions!

GitHub link: https://github.com/X-PLUG/mPLUG-DocOwl

Paper link: https://arxiv.org/abs/2403.12895

The above is the detailed content of Alibaba 7B multi-modal document understanding large model wins new SOTA. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

What is Ethereum? What are the ways to obtain Ethereum ETH?

Jul 31, 2025 pm 11:00 PM

What is Ethereum? What are the ways to obtain Ethereum ETH?

Jul 31, 2025 pm 11:00 PM

Ethereum is a decentralized application platform based on smart contracts, and its native token ETH can be obtained in a variety of ways. 1. Register an account through centralized platforms such as Binance and Ouyiok, complete KYC certification and purchase ETH with stablecoins; 2. Connect to digital storage through decentralized platforms, and directly exchange ETH with stablecoins or other tokens; 3. Participate in network pledge, and you can choose independent pledge (requires 32 ETH), liquid pledge services or one-click pledge on the centralized platform to obtain rewards; 4. Earn ETH by providing services to Web3 projects, completing tasks or obtaining airdrops. It is recommended that beginners start from mainstream centralized platforms, gradually transition to decentralized methods, and always attach importance to asset security and independent research, to

How to choose a free market website in the currency circle? The most comprehensive review in 2025

Jul 29, 2025 pm 06:36 PM

How to choose a free market website in the currency circle? The most comprehensive review in 2025

Jul 29, 2025 pm 06:36 PM

The most suitable tools for querying stablecoin markets in 2025 are: 1. Binance, with authoritative data and rich trading pairs, and integrated TradingView charts suitable for technical analysis; 2. Ouyi, with clear interface and strong functional integration, and supports one-stop operation of Web3 accounts and DeFi; 3. CoinMarketCap, with many currencies, and the stablecoin sector can view market value rankings and deans; 4. CoinGecko, with comprehensive data dimensions, provides trust scores and community activity indicators, and has a neutral position; 5. Huobi (HTX), with stable market conditions and friendly operations, suitable for mainstream asset inquiries; 6. Gate.io, with the fastest collection of new coins and niche currencies, and is the first choice for projects to explore potential; 7. Tra

Ethena treasury strategy: the rise of the third empire of stablecoin

Jul 30, 2025 pm 08:12 PM

Ethena treasury strategy: the rise of the third empire of stablecoin

Jul 30, 2025 pm 08:12 PM

The real use of battle royale in the dual currency system has not yet happened. Conclusion In August 2023, the MakerDAO ecological lending protocol Spark gave an annualized return of $DAI8%. Then Sun Chi entered in batches, investing a total of 230,000 $stETH, accounting for more than 15% of Spark's deposits, forcing MakerDAO to make an emergency proposal to lower the interest rate to 5%. MakerDAO's original intention was to "subsidize" the usage rate of $DAI, almost becoming Justin Sun's Solo Yield. July 2025, Ethe

What is Binance Treehouse (TREE Coin)? Overview of the upcoming Treehouse project, analysis of token economy and future development

Jul 30, 2025 pm 10:03 PM

What is Binance Treehouse (TREE Coin)? Overview of the upcoming Treehouse project, analysis of token economy and future development

Jul 30, 2025 pm 10:03 PM

What is Treehouse(TREE)? How does Treehouse (TREE) work? Treehouse Products tETHDOR - Decentralized Quotation Rate GoNuts Points System Treehouse Highlights TREE Tokens and Token Economics Overview of the Third Quarter of 2025 Roadmap Development Team, Investors and Partners Treehouse Founding Team Investment Fund Partner Summary As DeFi continues to expand, the demand for fixed income products is growing, and its role is similar to the role of bonds in traditional financial markets. However, building on blockchain

Ethereum (ETH) NFT sold nearly $160 million in seven days, and lenders launched unsecured crypto loans with World ID

Jul 30, 2025 pm 10:06 PM

Ethereum (ETH) NFT sold nearly $160 million in seven days, and lenders launched unsecured crypto loans with World ID

Jul 30, 2025 pm 10:06 PM

Table of Contents Crypto Market Panoramic Nugget Popular Token VINEVine (114.79%, Circular Market Value of US$144 million) ZORAZora (16.46%, Circular Market Value of US$290 million) NAVXNAVIProtocol (10.36%, Circular Market Value of US$35.7624 million) Alpha interprets the NFT sales on Ethereum chain in the past seven days, and CryptoPunks ranked first in the decentralized prover network Succinct launched the Succinct Foundation, which may be the token TGE

Solana and the founders of Base Coin start a debate: the content on Zora has 'basic value'

Jul 30, 2025 pm 09:24 PM

Solana and the founders of Base Coin start a debate: the content on Zora has 'basic value'

Jul 30, 2025 pm 09:24 PM

A verbal battle about the value of "creator tokens" swept across the crypto social circle. Base and Solana's two major public chain helmsmans had a rare head-on confrontation, and a fierce debate around ZORA and Pump.fun instantly ignited the discussion craze on CryptoTwitter. Where did this gunpowder-filled confrontation come from? Let's find out. Controversy broke out: The fuse of Sterling Crispin's attack on Zora was DelComplex researcher Sterling Crispin publicly bombarded Zora on social platforms. Zora is a social protocol on the Base chain, focusing on tokenizing user homepage and content

What is Zircuit (ZRC currency)? How to operate? ZRC project overview, token economy and prospect analysis

Jul 30, 2025 pm 09:15 PM

What is Zircuit (ZRC currency)? How to operate? ZRC project overview, token economy and prospect analysis

Jul 30, 2025 pm 09:15 PM

Directory What is Zircuit How to operate Zircuit Main features of Zircuit Hybrid architecture AI security EVM compatibility security Native bridge Zircuit points Zircuit staking What is Zircuit Token (ZRC) Zircuit (ZRC) Coin Price Prediction How to buy ZRC Coin? Conclusion In recent years, the niche market of the Layer2 blockchain platform that provides services to the Ethereum (ETH) Layer1 network has flourished, mainly due to network congestion, high handling fees and poor scalability. Many of these platforms use up-volume technology, multiple transaction batches processed off-chain

Top 10 AI concept coins worth paying attention to in 2025 What are the AI concept coins worth paying attention to in 2025

Jul 29, 2025 pm 06:06 PM

Top 10 AI concept coins worth paying attention to in 2025 What are the AI concept coins worth paying attention to in 2025

Jul 29, 2025 pm 06:06 PM

The top ten potential AI concept coins in 2025 include: 1. Render (RNDR) as a decentralized GPU rendering network, providing AI with key computing power infrastructure; 2. Fetch.ai (FET) builds an intelligent economy through autonomous economic agents and participates in the formation of the "Artificial Intelligence Super Alliance" (ASI); 3. SingularityNET (AGIX) builds a decentralized AI service market, promotes the development of general artificial intelligence, and is a core member of ASI; 4. Ocean Protocol (OCEAN) solves data silos and privacy issues, provides secure data transactions and "Compute-to-Data" technology to support the AI data economy; 5.