Let's analyze Redis cache consistency, cache penetration, cache breakdown and cache avalanche issues together

May 19, 2022 10:12 AM

This article covers several Redis topics: cache consistency, cache penetration, cache breakdown and cache avalanche. It also covers how writes to cached data are synchronized and kept consistent with the DB. It starts with the consistency between the cache and the DB. I hope it is helpful to everyone.

(1) Cache invalidation consistency problem

The usual way to use a cache is to read the cache first. If the data is not there, read it from the DB and write the result to the cache. The next read of the same data is then served directly from the cache.
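The read path above can be sketched as a small cache-aside class. The sketch also includes the common update order of invalidating the cached copy before modifying the DB. It assumes plain Python dicts stand in for the cache and the DB; `CacheAside`, `read`, `write` and `db_reads` are hypothetical names, not a real client API.

```python
class CacheAside:
    """Cache-aside pattern; plain dicts stand in for the cache and the DB (assumption)."""

    def __init__(self, db):
        self.db = db
        self.cache = {}
        self.db_reads = 0  # counts how often the DB is actually queried

    def read(self, key):
        # 1. Try the cache first.
        if key in self.cache:
            return self.cache[key]
        # 2. On a miss, read from the DB ...
        self.db_reads += 1
        value = self.db.get(key)
        # 3. ... and write the result back so the next read is served from the cache.
        if value is not None:
            self.cache[key] = value
        return value

    def write(self, key, value):
        # Invalidate the cached copy first, then modify the DB.
        self.cache.pop(key, None)
        self.db[key] = value
```

Reading `"Hello"` twice queries the DB once. After `write("Hello", "OCS")`, the next read misses and picks up the new value.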

When data is modified, the cached copy is invalidated first and the DB is modified afterwards. This order avoids one failure case: the DB update succeeds, but the cached copy is never cleared because of a network or other problem, leaving dirty data in the cache.

This order still cannot rule out dirty data under concurrency. Suppose the business issues a large number of reads and updates for the data Key:Hello Value:World.

- Thread A reads Key:Hello from OCS, gets Not Found, queries the DB and gets Key:Hello Value:World.
- Thread A prepares to write this data to OCS. Before it does so, it is slowed down, for example by network latency or by waiting for the CPU.
- Meanwhile, thread B starts updating the data to Key:Hello Value:OCS. B first invalidates the cache, because it does not know whether the data is cached. OCS processes the invalidation successfully.
- Thread A resumes, writes Key:Hello Value:World into the cache, and its task ends.
- Thread B then successfully updates the DB to Key:Hello Value:OCS.

The cache now holds the stale value World while the DB holds OCS.

To solve this problem, OCS extended the Memcached protocol with a deleteAndIncVersion interface (public cloud support was said to be coming soon). This interface does not actually delete the data. Instead, it marks the data as expired and increments its version number. If the data does not exist, NULL is written and a random version number is generated.

Writes to OCS support an atomic version comparison. A write is allowed only if the version number passed in matches the version stored in OCS, or if the data does not exist. Otherwise the modification is refused.

Back to the same scenario, now with versioning:

- Thread A reads Key:Hello from OCS, gets Not Found, queries the DB and gets Key:Hello Value:World. It prepares to write this data to OCS with the default version number 1.
- Before A writes, thread B starts updating the data to Key:Hello Value:OCS and first invalidates the cache. OCS handles the deleteAndIncVersion request and generates the random version number 12345 (by convention, random versions are greater than 1000).
- Thread A resumes and asks to write Key:Hello Value:World. The cache finds that the version numbers do not match (1 != 12345), so the write fails and thread A's task ends.
- Thread B then successfully updates the DB to Key:Hello Value:OCS.

At this point OCS holds Key:Hello Value:NULL Version:12345 and the DB holds Key:Hello Value:OCS. A subsequent read finds no valid value in OCS, loads the data from the DB and tries again to write it to OCS.
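The version check can be modelled in a single process. The sketch below is a hypothetical model of the behaviour described above, not OCS's actual API:

- `delete_and_inc_version` stands in for the deleteAndIncVersion interface.
- `set_if_version` stands in for the version-checked write.
- The random version is drawn above 1000, matching the convention in the example.
- A later reader is assumed to write back using the version it found stored with the NULL entry.

```python
import random
import threading


class VersionedCache:
    """Hypothetical in-process model of deleteAndIncVersion-style semantics."""

    DEFAULT_VERSION = 1  # version a reader uses when it found nothing in the cache

    def __init__(self):
        self._entries = {}  # key -> (value, version); value None marks "invalidated"
        self._lock = threading.Lock()  # makes compare-and-write atomic

    def get(self, key):
        with self._lock:
            return self._entries.get(key, (None, None))

    def delete_and_inc_version(self, key):
        """Mark the entry expired (value None) under a new random version > 1000."""
        with self._lock:
            _, old_version = self._entries.get(key, (None, 0))
            new_version = random.randint(1001, 1_000_000)
            while new_version == old_version:
                new_version = random.randint(1001, 1_000_000)
            self._entries[key] = (None, new_version)
            return new_version

    def set_if_version(self, key, value, version):
        """Write only if the key is absent or its stored version equals `version`."""
        with self._lock:
            current = self._entries.get(key)
            if current is not None and current[1] != version:
                return False  # version mismatch: refuse the write
            self._entries[key] = (value, version)
            return True


def replay_race():
    """Replays the thread A / thread B interleaving from the text, step by step."""
    cache, db = VersionedCache(), {"Hello": "World"}
    cache.get("Hello")                        # A: Not Found
    loaded = db["Hello"]                      # A: reads "World" from the DB
    cache.delete_and_inc_version("Hello")     # B: invalidates the cache first
    a_ok = cache.set_if_version("Hello", loaded, VersionedCache.DEFAULT_VERSION)  # A: refused
    db["Hello"] = "OCS"                       # B: updates the DB
    _, version = cache.get("Hello")           # later reader: NULL entry plus its version
    reload_ok = cache.set_if_version("Hello", db["Hello"], version)
    return a_ok, reload_ok, cache.get("Hello")[0]
```

`replay_race()` returns `(False, True, "OCS")`. Thread A's stale write is refused, and the later reload leaves the cache agreeing with the DB.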

(2) Write synchronization of cached data and its consistency with the DB

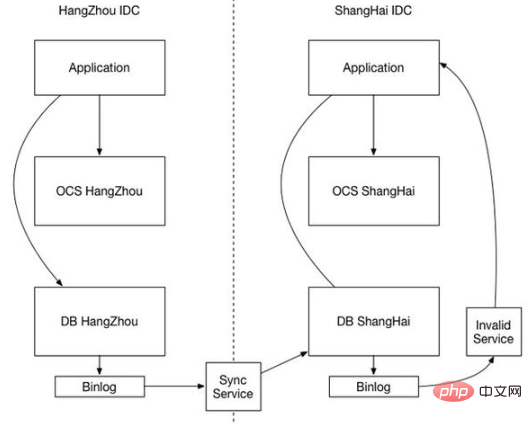

As a website grows and its reliability requirements rise, it ends up deployed across multiple IDCs (data centers). Each IDC has its own DB and cache system, and keeping the caches consistent becomes a prominent problem.

First, for efficiency, the cache system avoids disk I/O, even for writing a binlog. Also for performance, the cache system synchronizes only deletions across IDCs, not writes.

Because the cache system is much faster than the DB, cache synchronization generally arrives before DB synchronization. This opens a window in which the cache has no data and the DB still has the old data. If a business request reads the data in this window, the cache read returns Not Found, so the old data is read from the DB and loaded into the cache. When the DB synchronization arrives later, only the DB is updated, and the dirty data in the cache is never cleared.

The root cause of this inconsistency is that two heterogeneous systems cannot coordinate their synchronization. Nothing guarantees that the DB data is synchronized first and the cached data afterwards. So the question is how the cache system can wait for DB synchronization, or whether the two can share one synchronization mechanism. Driving cache synchronization from the DB binlog is a feasible solution.

The DB in IDC1 is replicated to the DB in IDC2 through the binlog. When IDC2-DB applies those changes, it also generates its own binlog, and cache synchronization can be driven by this IDC2-DB binlog. The cache synchronization module parses the binlog and invalidates the corresponding cache keys.

This turns the synchronization from parallel into serial. A cache key is invalidated only after the corresponding DB change has been applied, which guarantees the order.
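The serial, binlog-driven invalidation can be sketched as follows. The sketch assumes the IDC2 binlog can be consumed as an ordered stream of (key, new value) events. `BinlogEvent`, `Idc2`, `apply_replicated_change` and `run_cache_sync` are hypothetical names, and the in-memory deque stands in for a real binlog parser.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class BinlogEvent:
    key: str
    value: str


class Idc2:
    """IDC2 with its own DB, cache, and binlog (hypothetical in-memory model)."""

    def __init__(self, initial_db):
        self.db = dict(initial_db)
        self.cache = {}
        self.binlog = deque()  # IDC2-DB's own binlog

    def read(self, key):
        # Cache-aside read: a miss loads whatever the DB currently holds.
        if key not in self.cache:
            self.cache[key] = self.db[key]
        return self.cache[key]

    def apply_replicated_change(self, event):
        # DB sync from IDC1: IDC2-DB applies the change, then records it in its own binlog.
        self.db[event.key] = event.value
        self.binlog.append(event)

    def run_cache_sync(self):
        # Cache sync module: consume IDC2-DB's binlog in order and invalidate each key.
        # An event only exists after the DB applied it, so invalidation can never
        # run ahead of the DB update it corresponds to.
        while self.binlog:
            event = self.binlog.popleft()
            self.cache.pop(event.key, None)
```

In this model, a stale value loaded during the sync window survives only until the binlog event for that key is consumed. The invalidation is therefore always ordered after the DB update.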

(3) Cache penetration (the DB receives unnecessary query traffic)

Cache penetration happens when requests ask for keys that exist in neither the cache nor the DB, so every such request misses the cache and reaches the DB.

Method 1: A Bloom filter. It is an extremely space-efficient probabilistic data structure for testing whether an element belongs to a set, similar to a HashSet. At its core are a long bit array and a series of hash functions. A Bloom filter can be implemented with Google's Guava library.

Drawbacks:
1) It has a false-positive rate, which rises as more elements are stored.
2) Elements normally cannot be deleted from a Bloom filter.
3) Choosing the bit-array length and the number of hash functions is complex.

What are the usage scenarios of a Bloom filter?
1) Spam address filtering (the number of addresses is huge)
2) URL deduplication in web crawlers
3) Solving the cache penetration problem
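The source mentions Guava for Java. Since the examples here use Python, the sketch below is a from-scratch Bloom filter, not Guava's API. It sizes the bit array with the standard formulas m = -n * ln(p) / (ln 2)^2 and k = (m / n) * ln 2, where n is the expected number of elements and p the target false-positive rate. It derives the k bit positions by double hashing over SHA-256.

`BloomFilter`, `optimal_params` and `guarded_get` are hypothetical names. The filter can return false positives but never false negatives, which is what makes it safe as a guard in front of the DB.

```python
import hashlib
import math


def optimal_params(n, p):
    """Bit-array size m and hash count k for n items at false-positive rate p."""
    m = math.ceil(-n * math.log(p) / (math.log(2) ** 2))
    k = max(1, round(m / n * math.log(2)))
    return m, k


class BloomFilter:
    def __init__(self, expected_items, false_positive_rate):
        self.size, self.num_hashes = optimal_params(expected_items, false_positive_rate)
        self.bits = bytearray((self.size + 7) // 8)

    def _positions(self, item):
        # Double hashing: position_i = h1 + i * h2 (mod m), from one SHA-256 digest.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force a non-zero step
        return [(h1 + i * h2) % self.size for i in range(self.num_hashes)]

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # False means "definitely never added"; True means "probably added".
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


def guarded_get(key, bloom, cache, db):
    """Hypothetical read path: keys the filter rejects never reach the cache or the DB."""
    if not bloom.might_contain(key):
        return None
    if key in cache:
        return cache[key]
    value = db.get(key)
    if value is not None:
        cache[key] = value
    return value
```

For 1,000 expected keys at a 1% false-positive rate, `optimal_params` gives roughly 9,586 bits and 7 hash functions. The filter must be populated with every key that exists in the DB and updated as keys are added.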

Method 2: Cache empty results as well, and set an expiration time for these empty entries.
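A sketch of caching empty results under stated assumptions. `NullCachingReader` and its parameters are hypothetical names, and a dict with explicit expiry timestamps stands in for the cache. The empty entry is a private sentinel object; a real Redis deployment would instead store an agreed placeholder string. The shorter `null_ttl` is a design choice, so that a key created later becomes visible fairly soon.

```python
import time

NULL_MARKER = object()  # placeholder stored for keys the DB does not have


class NullCachingReader:
    def __init__(self, db, ttl=300.0, null_ttl=30.0, clock=time.monotonic):
        self.db = db
        self.ttl = ttl            # expiry for real values, in seconds
        self.null_ttl = null_ttl  # shorter expiry for empty results
        self.clock = clock
        self.cache = {}           # key -> (value or NULL_MARKER, expires_at)
        self.db_queries = 0

    def get(self, key):
        entry = self.cache.get(key)
        if entry is not None and self.clock() < entry[1]:
            # Cache hit; an empty-result hit also short-circuits the DB.
            return None if entry[0] is NULL_MARKER else entry[0]
        self.db_queries += 1
        value = self.db.get(key)
        if value is None:
            self.cache[key] = (NULL_MARKER, self.clock() + self.null_ttl)
        else:
            self.cache[key] = (value, self.clock() + self.ttl)
        return value
```

Repeated lookups of a nonexistent key query the DB once per `null_ttl` window instead of once per request.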

(4) Cache avalanche (many keys share the same expiration time, expire together and flood the DB)

Method 1: Many system designers use locks or queues so that the cache is rebuilt by only one thread (or process) at a time. This prevents a large number of concurrent requests from falling on the underlying storage system when keys expire.

Method 2: Randomize expiration times by adding a random value to each key's TTL, so that keys do not all expire at once.
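A minimal sketch of the randomized expiration in Method 2. `ttl_with_jitter` is a hypothetical helper. It returns whole seconds because that is what a TTL argument such as the `EX` option of Redis `SET` takes. The 3600-second base and 300-second jitter in the usage note are illustrative values only.

```python
import random


def ttl_with_jitter(base_ttl, max_jitter, rng=random):
    """Base TTL plus a uniform random offset in [0, max_jitter] seconds."""
    return base_ttl + rng.randint(0, max_jitter)
```

With `base_ttl=3600` and `max_jitter=300`, keys written in the same batch expire anywhere between 3600 and 3900 seconds later rather than all at once. Passing a seeded `random.Random` as `rng` makes the spread reproducible in tests.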

(5) Cache breakdown (a hot key expires and a large number of concurrent reads cause a small-scale avalanche)

A key may expire at a moment when a large number of concurrent requests for it happen to arrive. When these requests find the cache expired, each of them typically loads the data from the back-end DB and writes it back to the cache. This burst of concurrent requests can instantly overwhelm the back-end DB.

Method 1: Use a mutex key supported by the distributed cache, for example SETNX in Redis or add in Memcached. Only the request whose attempt to set the mutex key succeeds loads the DB and writes the result back to the cache. In other words, the DB load is performed by only one thread.
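A single-process sketch of the mutex-key approach. `InMemoryCache` and `get_with_mutex` are hypothetical names.

- `set_nx` stands in for an atomic "set if not exists, with expiry", which Redis offers as `SET key value NX EX seconds` and Memcached as `add`.
- The mutex expiry keeps a crashed loader from holding the mutex forever.
- After acquiring the mutex, the winner reads the cache again. This double check stops a thread that wins the mutex just after another thread refilled the cache from loading the DB a second time.
- `load_from_db` is assumed to return a non-None value.
- A production version would also store a unique token in the mutex and delete it only if it still holds that token.

```python
import threading
import time


class InMemoryCache:
    """Thread-safe dict with per-key expiry; a hypothetical stand-in for Redis/Memcached."""

    def __init__(self):
        self._data = {}  # key -> (value, expires_at or None)
        self._lock = threading.Lock()

    def _live(self, key):
        # Caller must hold self._lock. Returns the entry if present and not expired.
        entry = self._data.get(key)
        if entry is not None and entry[1] is not None and time.monotonic() >= entry[1]:
            del self._data[key]
            return None
        return entry

    def get(self, key):
        with self._lock:
            entry = self._live(key)
            return None if entry is None else entry[0]

    def set(self, key, value, ttl=None):
        with self._lock:
            self._data[key] = (value, None if ttl is None else time.monotonic() + ttl)

    def set_nx(self, key, value, ttl):
        """Atomically set key only if absent (like SET key value NX EX ttl)."""
        with self._lock:
            if self._live(key) is not None:
                return False
            self._data[key] = (value, time.monotonic() + ttl)
            return True

    def delete(self, key):
        with self._lock:
            self._data.pop(key, None)


def get_with_mutex(cache, key, load_from_db, value_ttl=60.0, lock_ttl=3.0, retry_delay=0.01):
    while True:
        value = cache.get(key)
        if value is not None:
            return value
        mutex_key = "mutex:" + key
        if cache.set_nx(mutex_key, "1", lock_ttl):
            try:
                # Double check: another thread may have refilled the cache
                # between our miss and acquiring the mutex.
                value = cache.get(key)
                if value is None:
                    value = load_from_db(key)
                    cache.set(key, value, value_ttl)
                return value
            finally:
                cache.delete(mutex_key)
        # Another request holds the mutex and is loading: wait briefly, then re-read.
        time.sleep(retry_delay)
```

When 20 threads miss the same hot key at once, one of them loads the DB and the others pick up the cached value on their next retry.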

Method 2: Use the mutex key in advance. Store a timeout value (timeout1) inside the value, with timeout1 smaller than the actual memcache timeout (timeout2). When a read finds that timeout1 has passed, the reader does three things in order:

1. Immediately extend timeout1.
2. Write the value, with its extended timeout1, back to the cache.
3. Load the data from the database and set it into the cache.

The drawback is that this intrudes further into business code and increases coding complexity.
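A sketch of the timeout1/timeout2 scheme, assuming one process in which a `threading.Lock` plays the role of the mutex key. A distributed deployment would need the cache's own atomic add or SETNX instead. `SoftTimeoutCache` and its parameters are hypothetical names, and each entry stores `(value, timeout1, timeout2)`.

When a reader finds timeout1 passed, it pushes timeout1 forward before reloading, so concurrent readers keep getting the old value instead of all hitting the DB. Plain misses are loaded without protection to keep the sketch short.

```python
import threading
import time


class SoftTimeoutCache:
    def __init__(self, load_from_db, soft_ttl=60.0, hard_ttl=120.0, clock=time.monotonic):
        if soft_ttl >= hard_ttl:
            raise ValueError("timeout1 (soft_ttl) must be smaller than timeout2 (hard_ttl)")
        self._load = load_from_db
        self._soft_ttl = soft_ttl
        self._hard_ttl = hard_ttl
        self._clock = clock
        self._data = {}                 # key -> (value, timeout1, timeout2)
        self._guard = threading.Lock()  # stand-in for the mutex key

    def get(self, key):
        now = self._clock()
        with self._guard:
            entry = self._data.get(key)
            if entry is not None and now < entry[2]:  # not past timeout2
                value, timeout1, timeout2 = entry
                if now < timeout1:
                    return value  # fresh: serve from cache
                # timeout1 passed: extend it and write it back first, so
                # concurrent readers keep serving the old value meanwhile.
                self._data[key] = (value, now + self._soft_ttl, timeout2)
        # A miss, or this caller is the one refreshing: load and reset both timeouts.
        value = self._load(key)
        now = self._clock()
        with self._guard:
            self._data[key] = (value, now + self._soft_ttl, now + self._hard_ttl)
        return value
```

With `soft_ttl=10` and `hard_ttl=20`, a read at second 11 triggers one reload, and reads that arrive during that reload still see the previous value.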

Method 3: "Never expires". From Redis's point of view, no expiration time is set at all, so a hot key cannot expire. This is "physical" non-expiration.

From a functional point of view, however, data that never expires would become static. So the expiration time is stored inside the value associated with the key. When a read finds that the value is about to expire, a background asynchronous thread rebuilds the cache. This is "logical" expiration.
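A sketch of logical expiration. Entries carry their own expiry time and are never physically removed. A stale read triggers at most one background rebuild while callers keep receiving the old value.

`LogicalExpiryCache`, `warm` and `close` are hypothetical names. The single-worker `ThreadPoolExecutor` is an assumed stand-in for the background asynchronous thread. In Redis, the equivalent would be storing the expiry timestamp inside the value and setting no TTL on the key.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class LogicalExpiryCache:
    """Hot-key cache whose entries never physically expire (hypothetical sketch)."""

    def __init__(self, load_from_db, logical_ttl=60.0, clock=time.monotonic):
        self._load = load_from_db
        self._ttl = logical_ttl
        self._clock = clock
        self._data = {}           # key -> (value, logical_expire_at); no physical TTL
        self._rebuilding = set()  # keys with a background rebuild in flight
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=1)  # the background rebuild thread

    def _rebuild(self, key):
        try:
            value = self._load(key)
            with self._lock:
                self._data[key] = (value, self._clock() + self._ttl)
        finally:
            with self._lock:
                self._rebuilding.discard(key)

    def warm(self, key):
        """Preload a hot key synchronously, e.g. at startup."""
        self._rebuild(key)

    def get(self, key):
        with self._lock:
            entry = self._data.get(key)
            if entry is None:
                return None  # hot keys are assumed to be preloaded with warm()
            value, expire_at = entry
            if self._clock() >= expire_at and key not in self._rebuilding:
                # Logically expired: schedule one rebuild, keep serving the old value.
                self._rebuilding.add(key)
                self._pool.submit(self._rebuild, key)
        return value

    def close(self):
        """Wait for pending rebuilds and stop the background thread."""
        self._pool.shutdown(wait=True)
```

The trade-off is that callers may briefly see stale data after the logical expiry, in exchange for never sending a burst of hot-key reads to the DB.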

(6) Cache-full and data-loss problems common in cache systems

These problems have to be analyzed against the specific business. Usually an LRU policy handles overflow when the cache is full. Redis's RDB and AOF persistence mechanisms protect data in certain failure scenarios.
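To illustrate what an LRU policy does on overflow, here is a minimal in-process LRU cache built on `collections.OrderedDict`. The class name and capacity are illustrative.

Redis itself handles this through configuration rather than code:

- `maxmemory` caps memory use.
- `maxmemory-policy`, for example `allkeys-lru` or `volatile-lru`, selects the eviction strategy. Redis approximates LRU by sampling keys rather than tracking exact recency.
- RDB snapshots are configured with `save` rules.
- The append-only file is enabled with `appendonly yes`, and `appendfsync` controls how often it is fsynced.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest (least recently used) first

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry

    def __len__(self):
        return len(self._items)
```

With capacity 2, reading `"a"` before inserting `"c"` makes `"b"` the least recently used entry, so `"b"` is the one evicted.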

The above is the detailed content of Let's analyze Redis cache consistency, cache penetration, cache breakdown and cache avalanche issues together. For more information, please follow other related articles on the PHP Chinese website!
