Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect. Building an LLM app is now within everyone’s reach. Thanks to the availability of AI models as well as powerful frameworks. In this tutorial, we will be building our first LLM application in the easiest way possible. Let’s begin the process. We will delve into each process from idea to code to deployment one at a time.

Table of contents

- Why LLM apps matter?

- Key Components of an LLM Application

- Choosing the Right Tools

- Step by Step Implementation?

- 1. Setting up Python and its environment

- 2. Installing required dependencies

- 3. Importing all the dependencies

- 4. Environment Setup

- 5. Agent Setup

- 6. Streamlit UI

- 7. Running the application

- Conclusion

- Frequently Asked Questions?

Why LLM apps matter?

LLM applications are unique in that they use natural language to process the user and also respond in natural language. Moreover, LLM apps are aware of the context of the user query and answer it accordingly. Common use cases of LLM applications are chatbots, content generation, and Q&A agents. It impacts the user experience significantly by incorporating conversational AI, the driver of today’s AI landscape.

Key Components of an LLM Application

Creating an LLM application involves steps in which we create different components of the LLM application. In the end, we use these components to build a full-fledged application. Let’s learn about them one by one to get a complete understanding of each component thoroughly.

- Foundational Model: This involves choosing your foundational AI model or LLM that you will be using in your application in the backend. Consider this as the brain of your application.

- Prompt Engineering: This is the most important component to give your LLM context about your application. This includes defining the tone, personality, and persona of your LLM so that it can answer accordingly.

- Orchestration Layer: Frameworks like Langchain, LlamaIndex act as the orchestration layer, which handles all your LLM calls and outputs to your application. These frameworks bind your application with LLM so that you can access AI models easily.

- Tools: Tools act as the most important component while building your LLM app. These tools are often used by LLMs to perform tasks that AI models are not capable of doing directly.?

Choosing the Right Tools

Selecting the right tools is one of the most important tasks for creating an LLM application. People often skip this part of the process and start to build an LLM application from scratch using any available tools. This approach is highly vague. One should define tools efficiently before going into the development phase. Let’ define our tools.

- Choosing an LLM: An LLM acts as the mind behind your application. Choosing the right LLM is a crucial step, keeping cost and availability parameters in mind. You can use LLMs from OpenAI, Groq, and Google. You have to collect an API key from their platform to use these LLMs.

- Frameworks: The frameworks act as the integration between your application and the LLM. It helps us in simplifying prompts to the LLM, chaining logic that defines the workflow of the application. There are frameworks like Langchain and LlamaIndex that are widely used for creating an LLM application. Langchain is considered the most beginner-friendly and easiest to use.

- Front-end Libraries: Python offers good support for building front-end for your applications in the minimal code possible. Libraries such as Streamlit, Gradio, and Chainlit have capabilities to give your LLM application a beautiful front end with minimal code required.

Step by Step Implementation

We have covered all the basic prerequisites for building our LLM application. Let’s move towards the actual implementation and write the code for developing the LLM application from scratch. In this guide, we will be creating an LLM application that takes in a query as input, breaks the query into sub-parts, searches the internet, and then compiles the result into a good-looking markdown report with the references used.

1. Setting up Python and its environment

The first step is to download the Python interpreter from its official website and install it on your system. Don’t forget to tick/select the Add PATH VARIABLE to the system option while installing.

Also, confirm that you’ve installed Python by typing python in the command line.

2. Installing required dependencies

This step installs the library dependencies into your system. Open your terminal and type in the following command to install the dependencies.

pip install streamlit dotenv langchain langchain-openai langchain-community langchain-core

This command will run the terminal and install all dependencies for running our application.

3. Importing all the dependencies

After installing the dependencies, head over to an IDE code editor, such as VS Code, and open it in the required path. Now, create a Python file “app.py” and paste the following import statements inside the file

import streamlit as st import os from dotenv import load_dotenv from langchain_openai import ChatOpenAI from langchain_community.tools.tavily_search import TavilySearchResults from langchain.agents import AgentExecutor, create_tool_calling_agent from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder from langchain_core.messages import AIMessage, HumanMessage

4. Environment Setup

We create some environment variables for our LLM and other tools. For this, create a file “.env” in the same directory and paste API keys inside it using the environment variables. For example, in our LLM application, we will be using two API keys: an OpenAI API key for our LLM, which can be accessed from here, and a Tavily API key, which will be used to search the internet in real-time, which can be accessed from here.

OPENAI_API_KEY="Your_API_Key" TAVILY_API_KEY="Your_API_Key"

Now, in your app.py, write the following piece of code. This code will load all the available environment variables directly into your working environment.

# --- ENVIRONMENT SETUP ---

load_dotenv()

OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

TAVILY_API_KEY = os.getenv("TAVILY_API_KEY")

if not OPENAI_API_KEY:

???st.error("? OpenAI API key not found. Please set it in your .env file (OPENAI_API_KEY='sk-...')")

if not TAVILY_API_KEY:

???st.error("? Tavily API key not found. Please set it in your .env file (TAVILY_API_KEY='tvly-...')")

if not OPENAI_API_KEY or not TAVILY_API_KEY:

???st.stop()

5. Agent Setup

As we have loaded all the environment variables. Let’s create the agentic workflow that every query would travel through while using the LLM application. Here we will be creating a tool i.e, Tavily search, that will search the internet. An Agent Executor that will execute the agent with tools.

# --- AGENT SETUP ---

@st.cache_resource

def get_agent_executor():

???"""

???Initializes and returns the LangChain agent executor.

???"""

???# 1. Define the LLM

???llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.2, api_key=OPENAI_API_KEY)

???# 2. Define Tools (simplified declaration)

???tools = [

???????TavilySearchResults(

???????????max_results=7,

???????????name="web_search",

???????????api_key=TAVILY_API_KEY,

???????????description="Performs web searches to find current information"

???????)

???]

???# 3. Updated Prompt Template (v0.3 best practices)

???prompt_template = ChatPromptTemplate.from_messages(

???????[

???????????("system", """

???????????You are a world-class research assistant AI. Provide comprehensive, accurate answers with Markdown citations.

???????????Process:

???????????1. Decomplexify questions into sub-queries

???????????2. Use `web_search` for each sub-query

???????????3. Synthesize information

???????????4. Cite sources using Markdown footnotes

???????????5. Include reference list

???????????Follow-up questions should use chat history context.

???????????"""),

???????????MessagesPlaceholder("chat_history", optional=True),

???????????("human", "{input}"),

???????????MessagesPlaceholder("agent_scratchpad"),

???????]

???)

???# 4. Create agent (updated to create_tool_calling_agent)

???agent = create_tool_calling_agent(llm, tools, prompt_template)

???# 5. AgentExecutor with modern configuration

???return AgentExecutor(

???????agent=agent,

???????tools=tools,

???????verbose=True,

???????handle_parsing_errors=True,

???????max_iterations=10,

???????return_intermediate_steps=True

???)

Here we are using a prompt template that directs the gpt-4o-mini LLM, how to do the searching part, compile the report with references. This section is responsible for all the backend work of your LLM application. Any changes in this section will directly affect the results of your LLM application.

6. Streamlit UI?

We have set up all the backend logic for our LLM application. Now, let’s create the UI for our application, which will be responsible for the frontend view of our application.

# --- STREAMLIT UI ---

st.set_page_config(page_title="AI Research Agent ?", page_icon="?", layout="wide")

st.markdown("""

???<style>

???.stChatMessage {

???????border-radius: 10px;

???????padding: 10px;

???????margin-bottom: 10px;

???}

???.stChatMessage.user {

???????background-color: #E6F3FF;

???}

???.stChatMessage.assistant {

???????background-color: #F0F0F0;

???}

???</style>

""", unsafe_allow_html=True)

st.title("? AI Research Agent")

st.caption("Your advanced AI assistant to search the web, synthesize information, and provide cited answers.")

if "chat_history" not in st.session_state:

???st.session_state.chat_history = []

for message_obj in st.session_state.chat_history:

???role = "user" if isinstance(message_obj, HumanMessage) else "assistant"

???with st.chat_message(role):

???????st.markdown(message_obj.content)

user_query = st.chat_input("Ask a research question...")

if user_query:

???st.session_state.chat_history.append(HumanMessage(content=user_query))

???with st.chat_message("user"):

???????st.markdown(user_query)

???with st.chat_message("assistant"):

???????with st.spinner("? Thinking & Researching..."):

???????????try:

???????????????agent_executor = get_agent_executor()

???????????????response = agent_executor.invoke({

???????????????????"input": user_query,

???????????????????"chat_history": st.session_state.chat_history[:-1]

???????????????})

???????????????answer = response["output"]

???????????????st.session_state.chat_history.append(AIMessage(content=answer))

???????????????st.markdown(answer)

???????????except Exception as e:

???????????????error_message = f"? Apologies, an error occurred: {str(e)}"

???????????????st.error(error_message)

???????????????print(f"Error during agent invocation: {e}")

In this section, we are defining our application’s title, caption, description, and chat history. Streamlit offers a lot of functionality for customizing our application. We have used a limited number of customization options here to make our application less complex. You are free to customize your application to your needs.

7. Running the application

We have defined all the sections for our application, and now it is ready for launch. Let’s see visually what we have created and analyse the results.

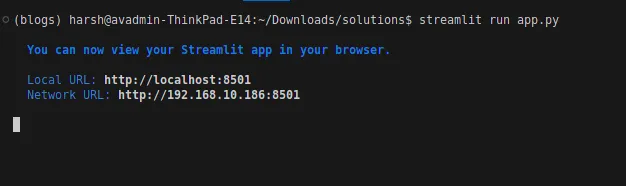

Open your terminal and type

streamlit run app.py

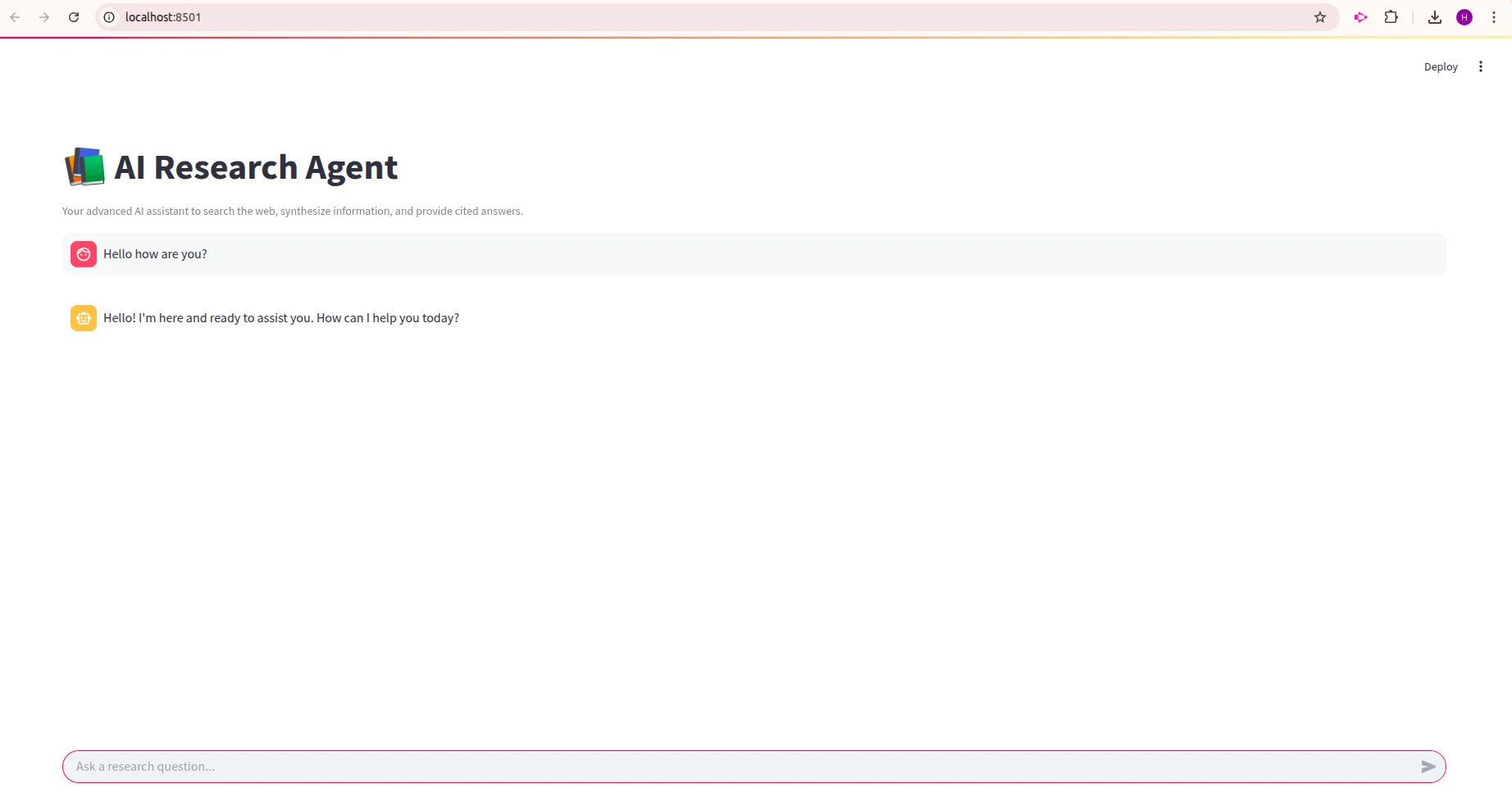

This will initialize your application, and you will be redirected to your default browser.

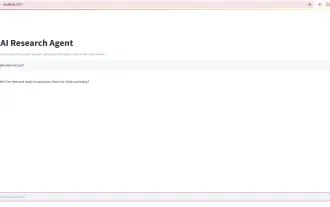

This is the UI of your LLM application:

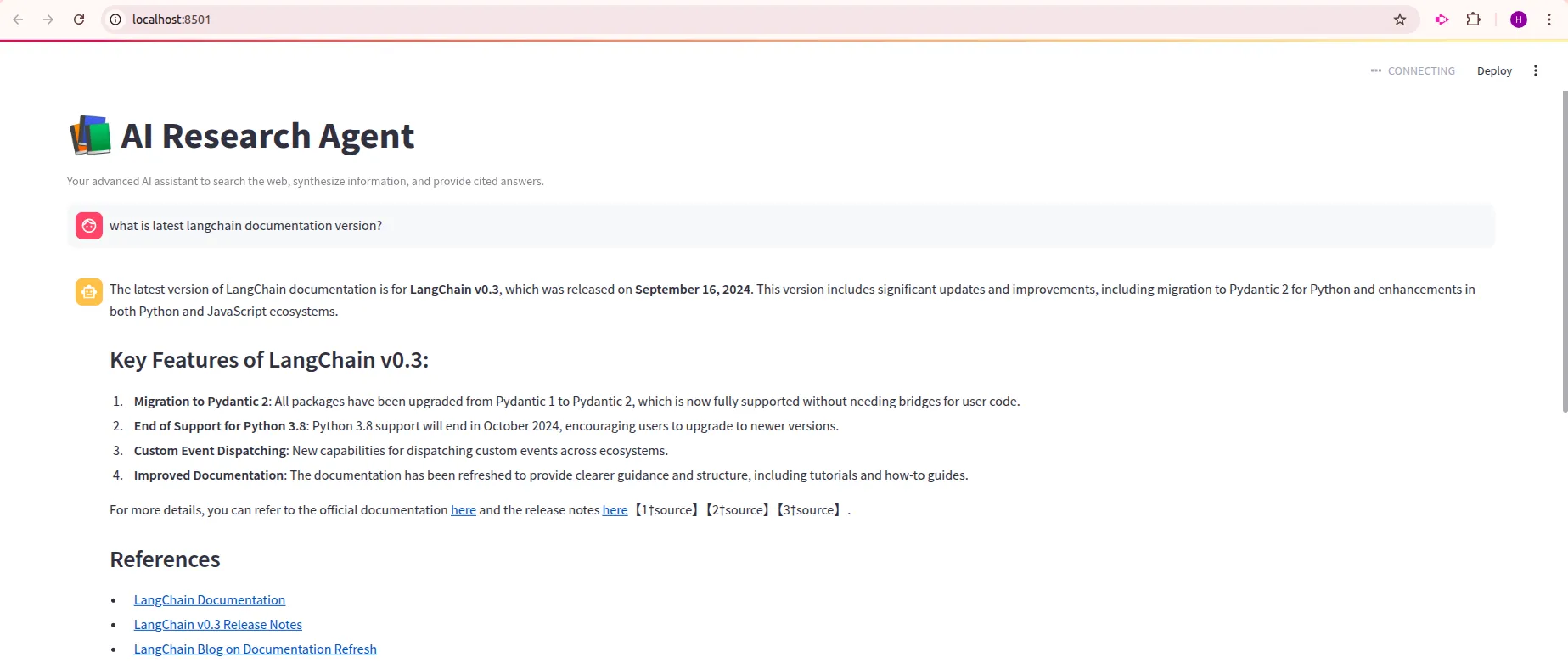

Let’s try testing our LLM application

Query: “What is the latest langchain documentation version?”

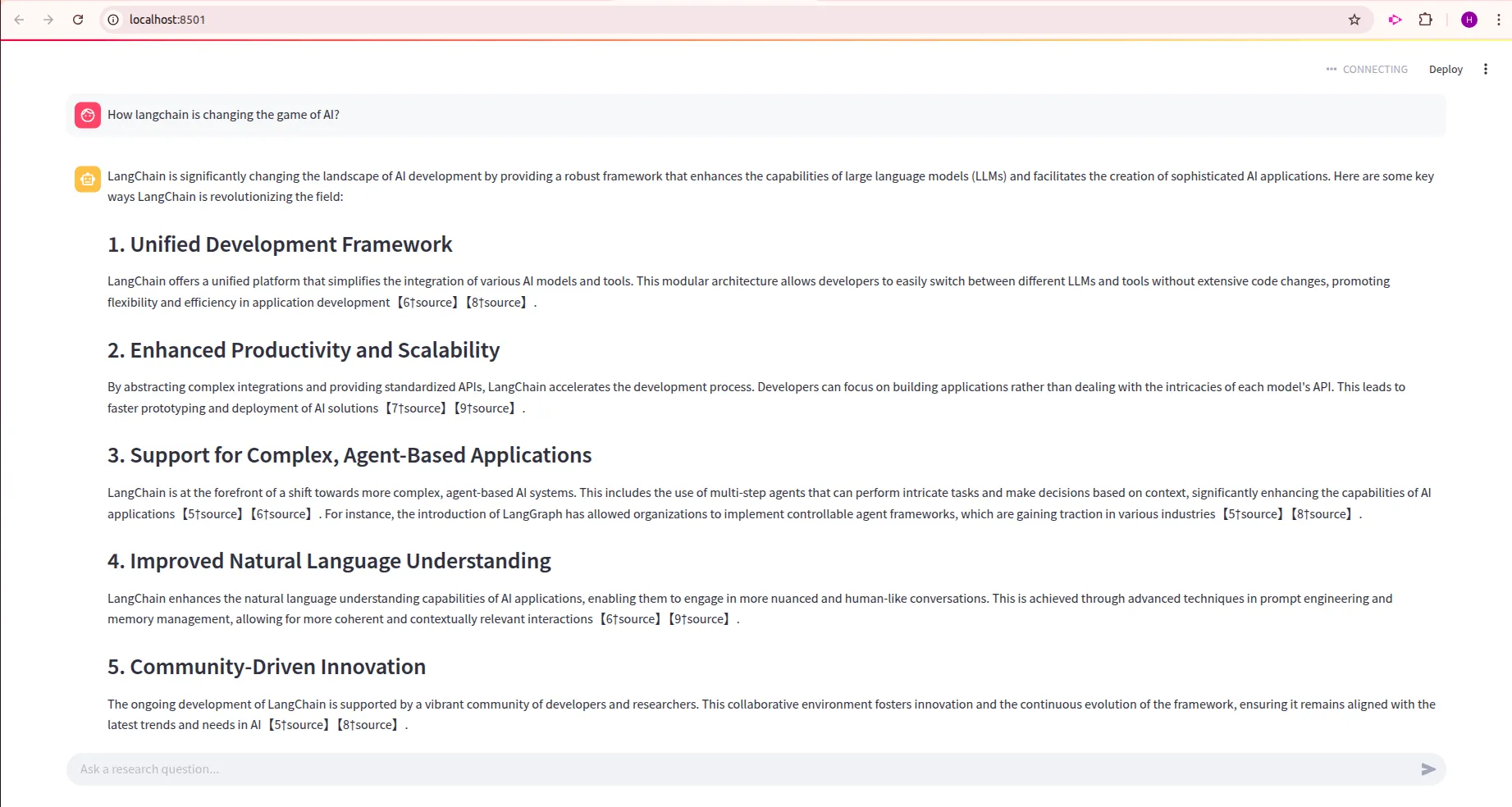

Query: “How langchain is changing the game of AI?”

From the outputs, we can see that our LLM application is showing the expected results. Detailed results with reference links. Anyone can click on these reference links to access the context from which our LLM is answering the question. Hence, we have successfully created our first-ever LLM application. Feel free to make changes in this code and create some more complex applications, taking this code as a reference.

Conclusion

Creating LLM applications has become easier than ever before. If you are reading this, that means you have enough knowledge to create your own LLM applications. In this guide, we went over setting up the environment, wrote the code, agent logic, defined the app UI, and also converted that into a Streamlit application. This covers all the major steps in developing an LLM application. Try to experiment with prompt templates, LLM chains, and UI customization to make your application personalized according to your needs. It is just the start; richer AI workflows are waiting for you, with agents, memory, and domain-specific tasks.

Frequently Asked Questions

Q1. Do I need to train a model from scratch?A. No, you can start with pre-trained LLMs (like GPT or open-source ones), focusing on prompt design and app logic.

Q2. Why use frameworks like LangChain?A. They simplify chaining prompts, handling memory, and integrating tools, without reinventing the wheel.?

Q3. How can I add conversational memory?A. Use buffer memory classes in frameworks (e.g., LangChain) or integrate vector databases for retrieval.

Q4. What is RAG and why use it?A. Retrieval-Augmented Generation brings external data into the model’s context, improving response accuracy on domain-specific queries.

Q5. Where can I deploy my LLM app?A. Start with a local demo using Gradio, then scale using Hugging Face Spaces, Streamlit Cloud, Heroku, Docker, or cloud platforms.

The above is the detailed content of Build Your First LLM Application: A Beginner's Tutorial. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

Here are ten compelling trends reshaping the enterprise AI landscape.Rising Financial Commitment to LLMsOrganizations are significantly increasing their investments in LLMs, with 72% expecting their spending to rise this year. Currently, nearly 40% a

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

Those days are numbered, thanks to AI. Search traffic for businesses like travel site Kayak and edtech company Chegg is declining, partly because 60% of searches on sites like Google aren’t resulting in users clicking any links, according to one stud

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

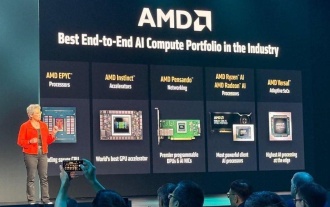

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h