Much of the recent progress in artificial intelligence rests on language models, which both comprehend and generate human language. Base LLMs and Instruction-Tuned LLMs represent two distinct approaches to language processing. This article examines the key differences between them: their training methods, characteristics, applications, and how each responds to the same query.

Table of Contents

- What are Base LLMs?
  - Training
  - Key Features
  - Functionality
  - Applications
- What are Instruction-Tuned LLMs?
  - Training
  - Key Features
  - Functionality
  - Applications
- Instruction-Tuning Methods
- Advantages of Instruction-Tuned LLMs
- Output Comparison and Analysis
  - Base LLM Example Interaction
  - Instruction-Tuned LLM Example Interaction
- Base LLM vs. Instruction-Tuned LLM: A Comparison
- Conclusion

What are Base LLMs?

Base LLMs are foundational language models trained on massive, unlabeled text datasets sourced from the internet, books, and academic papers. They learn to identify and predict linguistic patterns based on statistical relationships within this data. This initial training fosters versatility and a broad knowledge base across diverse topics.

Training

Base LLMs are pretrained on extensive datasets to learn and predict language patterns. This enables them to generate coherent text and respond to a variety of prompts, though further fine-tuning may be needed for specialized tasks or domains.

(Image: Base LLM training process)

Key Features

- Comprehensive Language Understanding: Their diverse training data provides a general understanding of numerous subjects.

- Adaptability: Designed for general use, they respond to a wide array of prompts.

- Instruction-Agnostic: They may interpret instructions loosely, often requiring rephrasing for desired results.

- Contextual Awareness (Limited): They maintain context in short conversations but struggle with longer dialogues.

- Creative Text Generation: They can generate creative content like stories or poems based on prompts.

- Generalized Responses: While informative, their answers may lack depth and specificity.

Functionality

Base LLMs primarily predict the next token (a word or word fragment) in a sequence, based on patterns learned from their training data. Given input text, they generate a continuation that fits those patterns. However, they are not specifically designed for question answering or conversation, so their responses tend to be general rather than precise. Their functionality includes:

- Text Completion: Completing sentences or paragraphs based on context.

- Content Generation: Creating articles, stories, or other written content.

- Basic Question Answering: Responding to simple questions with general information.
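To make the next-word idea concrete, here is a deliberately tiny sketch. It assumes a hypothetical three-sentence corpus and replaces the neural network of a real base LLM with simple word-pair (bigram) counts, so it illustrates only the principle of continuing text from statistical patterns, not how production models are built.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    followers: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def complete(model: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word until no continuation is known."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1])  # .get avoids creating empty entries
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy training data.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a dog sat on the rug",
]
model = train_bigram_model(corpus)
print(complete(model, "the dog", max_new_words=4))  # → the dog sat on the cat
```

The result is fluent but driven purely by the counts ("the" is most often followed by "cat"), which mirrors in miniature why a base model continues text rather than reasoning about what the user actually wants.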

Applications

- Content generation

- Serving as the foundation for further fine-tuning, such as instruction tuning

What are Instruction-Tuned LLMs?

Instruction-Tuned LLMs build on base models by undergoing further fine-tuning to understand and follow specific instructions. This typically involves supervised fine-tuning (SFT), in which the model learns from pairs of instructions and desired responses. Reinforcement Learning from Human Feedback (RLHF) is often applied afterward to further improve performance.

Training

Instruction-Tuned LLMs learn from examples demonstrating how to respond to clear prompts. This fine-tuning improves their ability to answer specific questions, stay on task, and accurately understand requests. Training uses a large dataset of sample instructions and corresponding expected model behavior.

(Image: Instruction dataset creation and instruction tuning process)
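Before fine-tuning, each instruction-response pair has to be turned into a training sequence. The sketch below shows one common way to do this under stated assumptions: the Alpaca-style prompt template and the `<eos>` marker are hypothetical choices, whitespace splitting stands in for a real subword tokenizer, and the example pairs are made up. The idea it illustrates is that the loss is usually computed only on the response tokens, so the model learns to produce answers rather than to reproduce prompts.

```python
from typing import Optional

# Hypothetical Alpaca-style template; real projects each define their own format.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"
EOS = "<eos>"  # hypothetical end-of-sequence marker


def build_sft_example(instruction: str, response: str) -> tuple[list[str], list[Optional[str]]]:
    """Turn one instruction-response pair into (tokens, labels).

    Whitespace splitting stands in for a real tokenizer. Labels mirror the tokens,
    except that prompt positions are None, meaning "exclude from the loss".
    """
    prompt_tokens = PROMPT_TEMPLATE.format(instruction=instruction).split()
    response_tokens = response.split() + [EOS]
    tokens = prompt_tokens + response_tokens
    labels: list[Optional[str]] = [None] * len(prompt_tokens) + response_tokens
    return tokens, labels


# Hypothetical instruction dataset.
dataset = [
    ("Translate 'bonjour' into English.", "Hello."),
    ("Summarize: The meeting moved from Monday to Tuesday.", "The meeting is now on Tuesday."),
]

for instruction, response in dataset:
    tokens, labels = build_sft_example(instruction, response)
    trained_on = [label for label in labels if label is not None]
    print(f"{len(tokens)} tokens, loss on: {' '.join(trained_on)}")
```

In real training code the tokens would be integer IDs, and masked positions are often marked with a sentinel such as `-100`, which PyTorch's cross-entropy loss ignores by default.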

Key Features

- Improved Instruction Following: They excel at interpreting complex prompts and following multi-step instructions.

- Complex Request Handling: They can decompose intricate instructions into manageable parts.

- Task Specialization: Ideal for specific tasks like summarization, translation, or structured advice.

- Responsive to Tone and Style: They adapt responses based on the requested tone or formality.

- Enhanced Contextual Understanding: They maintain context better in longer interactions, suitable for complex dialogues.

- Higher Accuracy: They provide more precise answers due to specialized instruction-following training.

Functionality

Rather than simply continuing the input text, Instruction-Tuned LLMs prioritize following the instructions it contains, resulting in more accurate and satisfying outcomes. Their functionality includes:

- Task Execution: Performing tasks like summarization, translation, or data extraction based on user instructions.

- Contextual Adaptation: Adjusting responses based on conversational context for coherent interactions.

- Detailed Responses: Providing in-depth answers, often including examples or explanations.

Applications

- Tasks requiring high customization and specific formats

- Applications needing enhanced responsiveness and accuracy

Instruction-Tuning Methods

Instruction-Tuned LLMs can be summarized as: Base LLM + Further Tuning + RLHF

- Foundational Base: Base LLMs provide the initial broad language understanding.

- Instructional Training: Further tuning trains the base LLM on a dataset of instructions and desired responses, improving its ability to follow directions.

- Feedback Refinement: RLHF allows the model to learn from human preferences, improving helpfulness and alignment with user goals.

- Result: Instruction-Tuned LLMs – knowledgeable and adept at understanding and responding to specific requests.
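The feedback-refinement step usually begins by training a reward model on human comparisons of two responses to the same prompt. A common objective for this (used, for example, in InstructGPT) is the pairwise loss $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$, which is small when the human-preferred response gets the higher score. The sketch below computes only that loss for hypothetical reward scores; it does not cover the subsequent reinforcement-learning stage (often PPO) that optimizes the LLM against the trained reward model.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise reward-model loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); the two branches avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward-model scores for (human-preferred, rejected) response pairs.
comparisons = [(2.0, 0.5), (1.2, 1.0), (-0.3, 0.8)]

losses = [preference_loss(chosen, rejected) for chosen, rejected in comparisons]
mean_loss = sum(losses) / len(losses)
for (chosen, rejected), loss in zip(comparisons, losses):
    print(f"chosen={chosen:+.1f} rejected={rejected:+.1f} loss={loss:.3f}")
print(f"mean loss: {mean_loss:.3f}")
```

The third pair, where the rejected response scores higher, contributes the largest loss; minimizing the mean over many such comparisons is what pushes the reward model toward human preferences.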

Advantages of Instruction-Tuned LLMs

- Greater Accuracy and Relevance: Fine-tuning enhances expertise in specific areas, providing precise and relevant answers.

- Tailored Performance: They excel in targeted tasks, adapting to specific business or application needs.

- Expanded Applications: Because they can be steered with plain-language instructions, they can be applied across many industries and use cases.

Output Comparison and Analysis

Base LLM Example Interaction

Query: “Who won the World Cup?”

Base LLM Response: “I don’t know; there have been multiple winners.” (Technically correct but lacks specificity.)

Instruction-Tuned LLM Example Interaction

Query: “Who won the World Cup?”

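Instruction-Tuned LLM Response: “The French national team won the FIFA World Cup in 2018, defeating Croatia in the final.” (Specific, informative, and contextually relevant. Because the answer comes from the model's training data, it can also be out of date: Argentina won the 2022 tournament.)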

Base LLMs generate creative but less precise responses, better suited for general content. Instruction-Tuned LLMs demonstrate improved instruction understanding and execution, making them more effective for accuracy-demanding applications. Their adaptability and contextual awareness enhance user experience.
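These differences also change how you prompt each model type. The sketch below frames the same question two ways; it only builds the inputs and calls no model. The few-shot examples, the system message, and both helper functions are hypothetical, and the `role`/`content` message list follows a widely used chat convention whose exact form varies by model and provider.

```python
def base_model_prompt(question: str) -> str:
    """Frame the question as text to continue, using a few-shot Q/A pattern.

    A base model only continues text, so the prompt demonstrates the pattern to extend.
    """
    examples = [  # hypothetical demonstration pairs
        ("Who wrote 'Pride and Prejudice'?", "Jane Austen."),
        ("What is the capital of Japan?", "Tokyo."),
    ]
    shots = "".join(f"Q: {q}\nA: {a}\n\n" for q, a in examples)
    return f"{shots}Q: {question}\nA:"


def instruct_model_messages(question: str) -> list[dict[str, str]]:
    """Pass the request directly as an instruction in role-tagged messages."""
    return [
        {"role": "system", "content": "Answer factual questions concisely, naming the year of any event."},
        {"role": "user", "content": question},
    ]


question = "Who won the World Cup?"
print(base_model_prompt(question))
print(instruct_model_messages(question))
```

For the base model, the few-shot pattern does the work an instruction cannot: it signals that the expected continuation after `A:` is a short, specific answer rather than more open-ended text.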

Base LLM vs. Instruction-Tuned LLM: A Comparison

| Feature | Base LLM | Instruction-Tuned LLM |
|---|---|---|
| Training Data | Vast amounts of unlabeled data | Base pretraining data, then fine-tuning on instruction-response data |
| Instruction Following | May interpret instructions loosely | Better understands and follows directives |
| Consistency/Reliability | Less consistent and reliable for specific tasks | More consistent, reliable, and task-aligned |
| Best Use Cases | Exploring ideas, general questions | Tasks requiring high customization |
| Capabilities | Broad language understanding and prediction | Refined, instruction-driven performance |

Conclusion

Base LLMs and Instruction-Tuned LLMs serve distinct purposes in language processing. Base LLMs provide broad language comprehension, while Instruction-Tuned LLMs excel at instruction following and specialized tasks. Instruction tuning substantially improves a model's ability to carry out user requests, making its output more directly useful for task-oriented applications.

The above is the detailed content of Base LLM vs Instruction-Tuned LLM. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

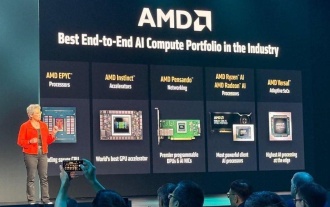

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

7 Key Highlights from Geoffrey Hinton on Superintelligent AI - Analytics Vidhya

Jun 21, 2025 am 10:54 AM

7 Key Highlights from Geoffrey Hinton on Superintelligent AI - Analytics Vidhya

Jun 21, 2025 am 10:54 AM

If the Godfather of AI tells you to “train to be a plumber,” you know it’s worth listening to—at least that’s what caught my attention. In a recent discussion, Geoffrey Hinton talked about the potential future shaped by superintelligent AI, and if yo