Understand the Python crawler parser BeautifulSoup4 in one article

Jul 12, 2022, 04:56 PM

This article covers the Python crawler parser BeautifulSoup4. Beautiful Soup is a Python library that extracts data from HTML or XML files. Working through the parser of your choice, it provides the usual ways of navigating, searching, and modifying a document. Let's take a look.

1. Introduction to the BeautifulSoup4 library

1. Introduction

Beautiful Soup is a Python library that extracts data from HTML or XML files. Working through the parser of your choice, it provides the usual ways of navigating, searching, and modifying a document, and it can save you hours or even days of work.

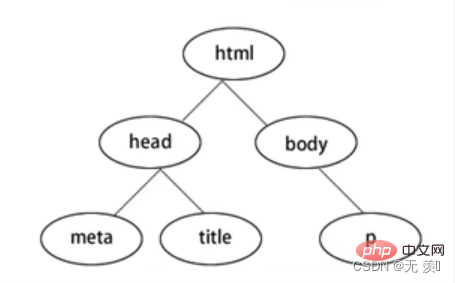

BeautifulSoup4 converts the web page into a DOM tree:
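As a rough illustration of that tree, the sketch below parses a small page and walks a few nodes. The HTML string and its URLs are made up for this example, and the built-in `html.parser` is used only because it needs no extra installation.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page, used only for illustration.
html = """
<html>
  <head><title>Demo page</title></head>
  <body>
    <p class="intro">Hello, <b>soup</b>!</p>
    <a href="https://example.com/a">First link</a>
    <a href="https://example.com/b">Second link</a>
  </body>
</html>
"""

# Build the tree with the parser from the Python standard library.
soup = BeautifulSoup(html, "html.parser")

title_text = soup.title.string         # text inside <title>
parent_name = soup.title.parent.name   # name of the tag that contains <title>
bold_text = soup.p.b.string            # first <p>, then its <b> child
links = [a["href"] for a in soup.find_all("a")]  # href of every <a> tag

print(title_text)
print(parent_name)
print(bold_text)
print(links)
```

Attribute access such as `soup.title` returns the first matching tag, while `find_all` returns every match, so the two are usually combined depending on whether one node or a list is wanted.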

2. Download the module

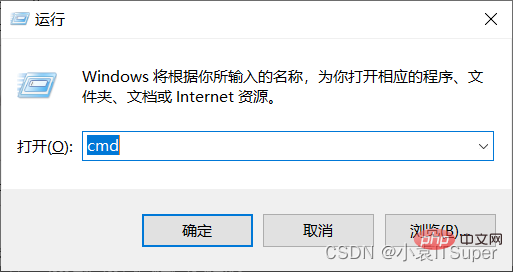

1. On a Windows computer, press Win + R and enter: cmd

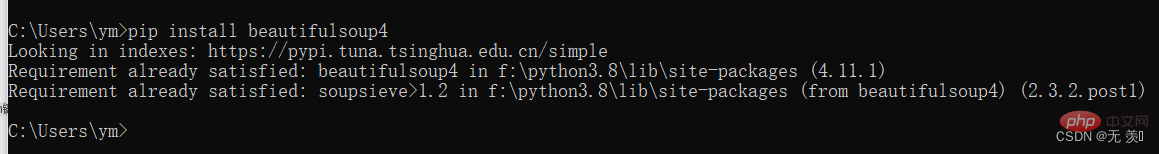

2. Install beautifulsoup4 by entering the pip command: pip install beautifulsoup4. In the screenshot the package was already installed, so pip reports the installed version, which confirms the installation succeeded.

3. Import the package

from bs4 import BeautifulSoup

3. Parsing library

BeautifulSoup relies on a parser to do the actual parsing. In addition to the HTML parser in the Python standard library, it supports several third-party parsers (such as lxml):

| Usage | Advantages | Disadvantages |
|---|---|---|
| BeautifulSoup(html, 'html.parser') | Part of Python's standard library; moderate speed; strong document fault tolerance | Poor document fault tolerance in Python versions before 2.7.3 and before 3.2.2 |
| BeautifulSoup(html, 'lxml') | Fast; strong document fault tolerance | Requires the C library to be installed |
| BeautifulSoup(html, 'xml') | Fast; the only parser that supports XML | Requires the C library to be installed |
| BeautifulSoup(html, 'html5lib') | Best fault tolerance; parses documents the way a browser does; generates documents in HTML5 format | Slow; pure Python (no C extension), but must be installed separately |
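To see how the choice of parser affects the result, the sketch below feeds the same malformed fragment to each HTML parser and prints what comes back. The fragment is invented for this example, and the code assumes only `html.parser` is guaranteed to be present: when lxml or html5lib is not installed, Beautiful Soup raises `FeatureNotFound`, which is caught and reported.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Deliberately malformed fragment (hypothetical): <p> and <a> are never closed.
broken_html = "<p>Unclosed paragraph<a href='/x'>link"

results = {}
for parser in ("html.parser", "lxml", "html5lib"):
    try:
        soup = BeautifulSoup(broken_html, parser)
    except FeatureNotFound:
        # The requested parser is not installed in this environment.
        results[parser] = None
        continue
    # Serialize the repaired tree so the parsers can be compared side by side.
    results[parser] = str(soup)

for parser, markup in results.items():
    print(f"{parser:12} -> {markup if markup is not None else 'not installed'}")
```

The parsers may repair the fragment differently, for example by wrapping it in `<html>` and `<body>` tags or leaving it as a bare fragment, so it is worth naming the parser explicitly when results must be reproducible across machines.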
